For the most part, the argument against climate change sceptics fails to aspire to anything greater than insult and contempt. However, every now and then, the debate takes on a more nuanced form of condescension, since it revolves around whether or not the average sceptic can tell the difference between science and what scientists get up to in their free time. Such debate often gets dressed up using philosophical talking points such as the difference between normal and Post-Normal Science (PNS). Sceptics cite climate science as a classic case of PNS and their critics usually respond by deriding the very concept. Lengthy and often tedious discussions follow, peppered with references to the likes of Kuhn, Popper, Funtowicz and Ravetz. It’s a recipe for unresolvable dispute and, quite frankly, it leaves me cold.

So, today I want to steer clear of the philosophy of science and, instead, present a distinction that I feel is far more to the point. It really doesn’t matter whether or not one sees a difference between a normal and a post-normal version of science; all one really needs to see is that the climate change issue is not actually about science and a search for the truth. It’s about decision-making under uncertainty and the search for a rational decision. As such, the relevant distinctions are those between hypothesis testing, Signal Detection Theory (SDT) and decision theory.

Searching for truth

I won’t dwell too long on hypothesis testing because I suspect most readers will already be familiar with the concept. However, the following points need emphasising.

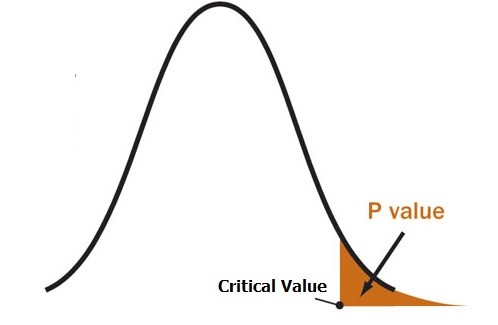

Hypothesis testing seeks to ascertain the statistical significance of the data, and for that purpose a so-called p-value is determined and compared against an agreed threshold. Here is a classic representation of the hypothesis testing problem:

The p-value tells you the probability of observing data at least as extreme as those actually observed, in the event that the null hypothesis were true.[1] And although an attempt to reject the null hypothesis is intended to determine the truth, the real outcome of the test is a statement of statistical significance, that is to say, a statement regarding the adequacy of the data. If the data aren’t sufficient to help you decide between your treasured hypothesis and the null hypothesis, then you have a decision to make: keep on collecting data in the hope that a strong enough signal emerges, or abandon your hypothesis. Amongst other things, that decision depends upon how much noise you think you are dealing with. Which brings me on to Signal Detection Theory.
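
To make the definition concrete, here is a minimal sketch that assumes the test statistic follows a standard normal distribution when the null hypothesis is true (a one-sided z-test, chosen purely for illustration; the z values are made up). The p-value is then the area of the null distribution at or beyond the observed statistic:

```python
from statistics import NormalDist

# Assumption for illustration: under the null hypothesis the test statistic is N(0, 1).
NULL = NormalDist(mu=0.0, sigma=1.0)


def one_sided_p_value(z: float) -> float:
    """Probability, if the null were true, of a statistic at least as large as z."""
    return 1.0 - NULL.cdf(z)


threshold = 0.05  # a conventional "agreed threshold"; another could be agreed
for z in (1.5, 2.0, 2.5):  # hypothetical observed statistics
    p = one_sided_p_value(z)
    verdict = "significant" if p < threshold else "not significant"
    print(f"z = {z}: p = {p:.4f} ({verdict} at {threshold})")
```

A small p-value only says the data would be surprising if the null were true; as note [1] explains, it is not the probability that the posited hypothesis is true.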

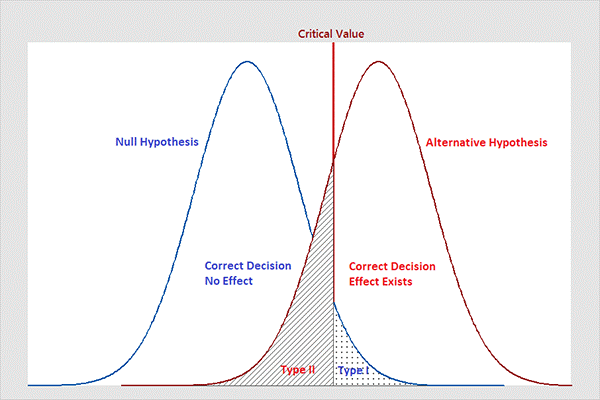

A common way of illustrating what is going on in an hypothesis test is to represent the posited and null hypotheses using two overlapping probability distributions, one for the noise (the null hypothesis) and the other for the signal (the alternative hypothesis). Hence:

This is a useful representation because it highlights an important point: for any given choice of ‘critical value’, there are two basic categories of error that can be made.

The first is the so-called Type I error, indicated by the area under the null hypothesis curve to the right of the critical value. This represents the probability that noise could be misread as signal, thereby causing the alternative hypothesis to be accepted in error. The second is the Type II error, indicated by the area under the alternative hypothesis curve to the left of the critical value. This represents the probability that a signal could be misread as noise, thereby causing the alternative hypothesis to be rejected in error. To reduce the likelihood of Type I errors one can raise the critical value used to delineate statistical significance, but that will only increase the likelihood of Type II errors.[2] Furthermore, each type of error comes with a cost, and weighing those costs boils down to value-laden judgement.
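
The trade-off can be sketched numerically. This minimal example assumes, purely for illustration, that noise and signal are unit-variance normal distributions whose means are two units apart, and then slides the critical value to the right:

```python
from statistics import NormalDist

# Illustrative assumption: equal-variance normal curves, means two units apart.
NOISE = NormalDist(mu=0.0, sigma=1.0)   # null hypothesis
SIGNAL = NormalDist(mu=2.0, sigma=1.0)  # alternative hypothesis


def error_rates(critical_value: float) -> tuple[float, float]:
    """Return (Type I, Type II) error probabilities for a given critical value."""
    type_1 = 1.0 - NOISE.cdf(critical_value)  # noise area right of the line: false alarm
    type_2 = SIGNAL.cdf(critical_value)       # signal area left of the line: miss
    return type_1, type_2


for c in (0.5, 1.0, 1.5, 2.0):
    t1, t2 = error_rates(c)
    print(f"critical value {c}: Type I = {t1:.3f}, Type II = {t2:.3f}")
```

Moving the line to the right shrinks the Type I area and swells the Type II area; no choice of critical value removes both, so deciding which balance to accept is the value-laden question.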

And this is the point where the scientific pursuit of truth merges into decision theory.

Making a decision

Although we are still talking about hypothesis testing and signal detection here, by introducing the concept of value we cannot avoid the topic of decision-making under uncertainty, in which the rationality of a decision is as much determined by notions of utility and risk as it is by data and statistical significance. It is for this reason that I suggest that decision theory provides the most appropriate framework for thinking about what is going on in the climate change debate.

Actually, it is perfectly natural that SDT and decision theory should be mentioned in the same breath, since the former is just a sub-branch of the latter. SDT was first developed by radar researchers seeking to provide a mathematical basis for the detection of signals, in which there are obvious trade-offs to be made between missing a significant signal, on the one hand, and raising false alarms, on the other. Every time a radar operator interprets a blip on the screen, he or she is making a decision under such uncertainty. However, whereas SDT can be used to codify Type I and Type II errors, it cannot, on its own, tell you which to prefer. The radar operator’s dilemma is a classic case of a decision to be made under uncertainty, where values are disputed and time is of the essence. You can call that post-normal science if you want, but that would be quite unnecessary; it is simply decision theory in practice.

Once the utility theory aspect of decision theory is added into the mix, it opens up the possibility of determining the correct decision according to a rationale, i.e. that of maximising utility. Take, for example, a decision made on the basis of a radar blip that may or may not indicate a threat. Here we have the following set of possibilities, in which ‘value’ can be a measure of a payoff or a penalty:

- The value of threat correctly detected (TCD)

- The value of threat correctly rejected (TCR)

- The value of threat missed (TM)

- The value of a false alarm (FA)

According to the decision-theoretic approach, the correct decision-making strategy is the one that maximises expected utility by setting the criterion for believing a blip (β) as follows:

β = ((TCR – FA) x prob(noise)) / ((TCD – TM) x prob(signal))
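
The equation is simple enough to sketch in code. In this minimal version (the function name and sample numbers are hypothetical), values are signed, so payoffs are positive and penalties negative, and prob(noise) is taken to be 1 − prob(signal), on the assumption that every blip is either one or the other:

```python
def optimal_beta(tcd: float, tcr: float, tm: float, fa: float,
                 prob_signal: float) -> float:
    """Criterion beta from the article's equation.

    tcd, tcr, tm and fa are the values of a threat correctly detected, a threat
    correctly rejected, a threat missed and a false alarm (payoffs positive,
    penalties negative). prob_signal is the prior probability that a blip is real.
    """
    if not 0.0 < prob_signal <= 1.0:
        raise ValueError("prob_signal must lie in (0, 1]")
    if tcd <= tm:
        raise ValueError("detecting a threat should be worth more than missing it")
    prob_noise = 1.0 - prob_signal  # assumption: a blip is either signal or noise
    return ((tcr - fa) * prob_noise) / ((tcd - tm) * prob_signal)


# Hypothetical values: a false alarm is costly, a missed threat far more so.
beta = optimal_beta(tcd=10, tcr=1, tm=-100, fa=-5, prob_signal=0.1)
print(f"beta = {beta:.3f}")  # below 1, so respond to comparatively weak evidence
```

A β below 1 describes a liberal observer, willing to believe the blip on modest evidence; above 1, a conservative one. Making a false alarm more costly, or lowering prob(signal), pushes β up; making a miss more costly pushes it down.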

Of course, in reality it can be very difficult to make such a calculation, particularly when the impacts of missing a threat and a false alarm are both potentially catastrophic, such as when the blip is possibly caused by an incoming salvo of ICBMs. Nevertheless, recognising and accepting the general form of the equation is an important first step towards understanding the nature of the problem. We are now a long way from simply testing a null hypothesis.

Tracking the climate change blip

Applying the β equation to the issue of climate change illustrates a number of points.

The first thing to understand is that there is no universal, correct value of β to be had. The equation shows that there is a relationship between the strength of evidence required and the imperative for taking alternative actions, but there is no reason to assume that exactly the same calculus applies to all people, or indeed at all levels of community. This effect is often described as a sign of motivated reasoning, as if that were a bad thing. The fact is, all reasoning is motivated, and it may or may not be rational depending only upon the extent to which the direction it takes is in keeping with the equation. When it comes to climate change, one person’s denial is another person’s precaution.

Secondly, it can be seen from the equation that two people may have very similar views regarding the signal to noise ratio and yet still differ profoundly regarding where they draw the line for action. This would be due, of course, to their differing evaluations of TCR, FA, TCD and TM. Both parties may accept the existence of climate change as a phenomenon because they both see a clear and distinct blip on their radar, and yet there are still factors to take into account before deciding the appropriate reaction. And it is this decision, based upon a personal calculation of β, that determines whether or not one is branded a denier. Consequently, seeing and accepting the blip on the screen is not good enough to escape accusations of blip denial.
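
To illustrate, here is a sketch of two hypothetical observers who agree on everything except values. It additionally assumes the common equal-variance Gaussian model of SDT (noise N(0, 1), signal N(d′, 1)), under which a likelihood-ratio criterion β corresponds to the critical value ln(β)/d′ + d′/2 on the evidence axis. All the numbers are invented:

```python
import math


def optimal_beta(tcd, tcr, tm, fa, prob_signal):
    """The article's beta equation, assuming prob(noise) = 1 - prob(signal)."""
    return ((tcr - fa) * (1.0 - prob_signal)) / ((tcd - tm) * prob_signal)


def critical_value(beta: float, d_prime: float) -> float:
    """Point on the evidence axis where the likelihood ratio equals beta.

    Assumes noise ~ N(0, 1) and signal ~ N(d_prime, 1), for which the
    likelihood ratio at x is exp(d_prime * x - d_prime ** 2 / 2).
    """
    return math.log(beta) / d_prime + d_prime / 2.0


D_PRIME = 1.5   # shared view of how far the signal stands out from the noise
P_SIGNAL = 0.3  # shared prior that the blip is real
BLIP = 1.0      # the same reading on both screens

observers = {
    "fears a miss": dict(tcd=10, tcr=5, tm=-200, fa=-10),
    "fears a false alarm": dict(tcd=10, tcr=5, tm=-20, fa=-200),
}

for name, values in observers.items():
    beta = optimal_beta(prob_signal=P_SIGNAL, **values)
    line = critical_value(beta, D_PRIME)
    decision = "act" if BLIP > line else "wait"
    print(f"{name}: beta = {beta:.2f}, critical value = {line:.2f} -> {decision}")
```

Both observers see the same blip and agree on d′ and prob(signal), yet one acts and the other waits; given their own values, neither is miscalculating.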

The next point to appreciate is that we are not all looking at the same screen. When climate scientists look at their screen, the signal to noise ratio has a very physical interpretation. It is the analysis of data that enables them to distinguish weather from climate, to infer meaning from sea level measurements and temperature records, or to read significance into the frequency or severity of extreme weather events. However, for the rest of us, the much more relevant signal to noise ratio is determined by observing the signal given out by a much vaunted scientific consensus and comparing that with the noise represented by dissenting scientists. How reliable this second signal to noise ratio is depends very much upon one’s views regarding the filters used to achieve it. Yes, the first ratio plays a significant role in determining the second, but it is certainly not the only influence. This matters because it is the second ratio that enters into the β calculation that determines policy and the public’s level of support for it.

Finally, when it comes to the issue of climate change, there isn’t a single element of the β calculation that should be beyond disputation. And yet this debate is difficult to maintain when there isn’t even universal acceptance of the equation to be applied. Talk to Extinction Rebellion, and you would think that prob(noise) = 0, so that β = ((TCR – FA) x 0) / ((TCD – TM) x prob(signal)) = 0. Put another way, whatever anyone says about the benefits of correct rejection, or the cost of a false alarm, believe the blip!

But despite all of the above, whatever one may think about the equation, it is all rather academic now. The blip has appeared on the radar and panic has ensued. Great efforts have been made to alter people’s calculation of β to suit the mood music. Amidst such harmony, it is getting increasingly difficult to get people to understand that the decision-making is actually a wicked problem, more redolent of cacophony. Even if one accepts that the science is settled, the calculation of β should still be an open issue. But that is not how it is. The blip was seen. It was interpreted as a salvo of incoming ICBMs. And the retaliatory response is already on its way.

Notes:

[1] Unfortunately, statements regarding the truth of the posited hypothesis are Bayesian posteriors that can’t actually be calculated, because null hypothesis testing doesn’t provide the necessary Bayesian prior.

[2] Altering the critical value is referred to as a response bias adjustment. Another way of influencing the number of Type I and Type II errors is to simply separate the probability distributions for the noise and the signal, i.e. improve the experimental setup.

Further Reading:

Utility and rational decision-making

Thanks for making me think. I think.

I stared at the β equation for quite some time. Is this the same as saying "the lower the value of beta, the more the evidence points to decisive action" or similar? Just looking at it, you might think that if beta < 1 it would pay to act.

Part of my confusion is due to the use of beta also as representative of Type II errors. Which also have the capacity to confuse. "The probability of not rejecting the null hypothesis when it is false" should be simple enough, but for some reason it's easier to get my head around when put in a table, or as in your figure of the overlapping pdfs. And (maybe this is just me) "the probability of rejecting the null hypothesis when it is true" (the alpha that we are familiar with, and which is widely abused) is a far easier concept.

All of this is slightly off-track, but I did want to mention that I am sure I have heard XR's Roger Hallam waffling on along the lines of, "I might be wrong. I might very well be wrong. But even if there's a small chance that I'm right, and [insert apocalyptic scene here], to [crash net zero or similar] is the only logical course." He seems to be saying that the probability of noise is high but that the consequence of signal is ginormous.

John, thanks also from me for making me think. I appreciated the technical stuff (always happy to try to learn), but your final paragraph expressed the current position beautifully. I might have had to stare at the screen very hard and engage brain cogs that have been dormant for a long time when reading the article, but I could relax for the final paragraph – a very neat summary.

The radar analogy might be good for illustrating decision theory, but I don’t think it applies very well to climate.

Time is of the essence? This is climate. Temperatures rising a little faster than they otherwise would, sea levels rising a little faster, perhaps storms getting a bit more energetic or frequent or more of one and less of the other, glaciers moving glacierly faster and so on with perhaps the worst case scenario being that the Earth turns into Venus over a millennial scale rather than a geological one.

With the incoming ICBMs, there’s no way to listen for an updated status report, since they are presumably moving at supersonic speed, but with climate? There should be plenty of new data coming in all the time, new hypotheses, new technologies... lots of room to change course.

Mike D,

With regard to climate change:

Yes, of course, in theory. In practice, with regard to something like, say, net zero, I fear we are in danger of arriving at a tipping-point when it will be too late to change course (to use analogies much loved by climate alarmists). I am currently in the process of buying a new car. I want a diesel with a 2 litre engine (around 150 bhp) and a manual gearbox (i.e. like the car I already have, which used to be a very common thing). Finding one is like looking for hens’ teeth. The car manufacturers are backing away from diesels at quite a rate of knots, and I suspect petrol engines will be uncommon soon. Once they’ve ceased making cars with internal combustion engines, it will be difficult, time-consuming and expensive to start producing them again.

It’s a similar story with regard to the other net zero policies. Once the UK politicians have had all their photo-ops blowing up coal-fired power stations (and I fear in due course gas ones too) there will probably be no way back for those reliable forms of energy generation. Once we’ve all been forced to rip out our gas boilers and at great expense install heat pumps in our homes, there’ll be no way back there either. Most people can’t afford to make that change and if forced to do so it will cripple them financially. They’ll certainly not be able to afford to re-install gas boilers thereafter, even if the infrastructure remains in place to make that a feasible thing to do.

And so on, and so on. We know there are no incoming climate ICBMs (or “carbon bombs”) but the authorities are behaving as if there are. And that’s a very large part of the problem.

Jit,

It looks like you have basically understood what I am trying to say.

The first thing I feel I should acknowledge is that Signal Detection Theory is not just a subset of decision theory but is also a subset of psychology, i.e. it analyses how people respond to stimuli which are supposed to motivate them to act. The criterion for response (β) is a measure of how conservative or trigger-happy someone may be when placed in the position of having to decide whether a stimulus is just noise or a significant signal to be acted upon. As such, it is not just a function of the signal to noise ratio, since it is also influenced by what is perceived to be at stake (a psychological state). An individual will set their β accordingly, thereby influencing the likelihood of Type I and Type II errors. My article takes SDT and the metaphor of the radar screen in order to suggest how societal perception may be working in controversial areas such as climate change.

The equation provided in my article was obtained from the Pinker book I reviewed in my previous one. It shows how expected utility calculations are supposed to dictate a rational allocation of a β value. Whilst he does not provide its derivation, he does demonstrate how its general form would work in practice. If, however, you want to know a bit more about how β works, I have found the following useful summary:

https://elvers.us/perception/sdtGraphic/

It includes the following important passage:

“β is the signal detection theory’s measure of response bias — how willing the observer is to say that the signal was present. β is defined as the ratio of the height of the signal plus noise distribution at the criterion to the height of the noise distribution at the criterion. As the criterion gets larger, β gets larger and the observer is said to be more conservative. As the criterion gets smaller, β gets closer to 0 and the observer is said to be liberal. Values of β from 0 to, but not including 1, are said to be liberal, while values of β greater than 1 are said to be conservative. Because of the asymmetry of β the base 10 logarithm of β is often used instead. If the base 10 logarithm of β is less than 0, the observer is liberal. If the base 10 logarithm of β is greater than 0, the observer is conservative. When β equals 1 or the base 10 logarithm of β equals 0, the observer is unbiased — neither liberal nor conservative.”
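
The passage’s definition can be checked with a short sketch. It assumes the common equal-variance Gaussian model, with unit-variance normal curves for noise and for signal plus noise, the latter shifted by a hypothetical d′; the function names are illustrative only:

```python
import math
from statistics import NormalDist


def beta_at(criterion: float, d_prime: float) -> float:
    """Height of the signal-plus-noise curve over the noise curve at the criterion."""
    noise = NormalDist(mu=0.0, sigma=1.0)
    signal_plus_noise = NormalDist(mu=d_prime, sigma=1.0)
    return signal_plus_noise.pdf(criterion) / noise.pdf(criterion)


def bias_label(beta: float, tol: float = 1e-9) -> str:
    """Classify response bias by the sign of log10(beta), as in the quoted passage."""
    log_beta = math.log10(beta)
    if abs(log_beta) <= tol:
        return "unbiased"
    return "liberal" if log_beta < 0 else "conservative"


D_PRIME = 1.0  # hypothetical separation between the two curves
for criterion in (0.0, 0.5, 1.0):
    beta = beta_at(criterion, D_PRIME)
    print(f"criterion {criterion}: beta = {beta:.3f}, "
          f"log10(beta) = {math.log10(beta):+.3f} -> {bias_label(beta)}")
```

Moving the criterion to the right raises β and makes the observer more conservative, exactly as the passage describes; under this model the unbiased point (β = 1) sits midway between the two curves.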

Mike,

I apologise for not having made myself clearer. By saying time is of the essence in both cases I wasn’t trying to make a statement regarding the timescale for a response. I was simply trying to make the point that, when stakes are deemed to be high, and when there is uncertainty to be dealt with, waiting to see a clearer picture may not always be deemed the best strategy. This plays out very quickly in the example of the radar operator and much more slowly with regard to climate science, but I believe the principle of time-constrained decision-making applies equally.

Mark,

Yes, that is precisely my point. Someone shouted ‘fire’ and we are all piling up at a locked fire exit.

Cli Sci… Calling fire at a crowded theatre.

Beth,

Yes I know. And I wouldn’t mind but I was quite enjoying the show.

I don’t know why it didn’t occur to me to do this earlier, but this morning I decided to play a round of Climate Change Only Connect, and I came across the following two research papers. The first uses signal detection theory to investigate layperson attitudes towards hurricane reports as evidence of global warming:

https://journals.ametsoc.org/view/journals/wcas/12/3/wcasD190132.xml

The second does the same with regard to heatwaves:

https://journals.ametsoc.org/view/journals/wcas/9/3/wcas-d-17-0003_1.xml

I won’t comment on them right now. For the time being, it’s just nice to see that I hadn’t thought up an idea that lacks a scientific basis. The other thing to be borne in mind is that my article doesn’t stop at the psychology of personal judgement but also speculates that the SDT concept can be used to evaluate societal judgement.

It’s taken me a while to get around to it but I now have something to say regarding the two papers I cited above.

In both cases, the authors fail to make a connection between SDT and utility theory. Consequently, both papers observe how a personal adoption of the response bias threshold (β) is reflected in attitudes to climate signal data, but neither attempts to explain the rationale behind the choice of β (other than to observe that numeracy seems to correlate with a high β). As a result, I don’t think either paper throws any light on how values influence the psychological state leading to a particular responsiveness to signal data. The phenomenon is simply taken as a given: some people need a much bigger signal to noise ratio in order to be responsive.

All of this is reminiscent of the cognitive bias known as ‘slothful induction’. Just to remind you, slothful induction is a reluctance to infer a conclusion from data, with the individual concerned preferring instead to see the effect as being the result of noise. To put it in SDT terminology, somebody who exhibits slothful induction is operating with a high β. However, there is an implication in the adjective ‘slothful’ that the choice of β is irrationally high because it cannot be justified given the signal to noise ratio concerned. What I hope I have succeeded in explaining in my article is that rationality is not just dependent upon a respect for a given signal to noise ratio but also has to take into account the values attributed to Type I and Type II errors. No particular response bias can therefore objectively be said to be irrational, because it is ultimately the product of a subjective, value-laden calculus. Besides which, I could add that slothful induction is not as common as its opposite, i.e. the ‘theorising disease’ (a term coined by Nassim Taleb), in which (to use SDT terminology) β is held to be irrationally small.

None of this means anything to John Cook, of course. He still thinks slothful induction is a type of cherry-picking, so there is no hope that he will ever understand any of this. He understands that slothful induction is associated with climate change scepticism and that is enough in his book for it to be a form of irrationality to add to his FLICC taxonomy, one way or another.

Those of you who have read my more recent article ‘A little Less Conversation’ will know that I have just started to read a book about the neuroscience of information overload. However, the following extracts I have just read from that book struck me as being more relevant here:

“Drugs such as guanfacine (brand names Tenex and Intuniv) and clonidine, that are prescribed for hypertension, ADHD, and anxiety disorders can block noradrenaline release, and in turn block your alerting to warning signals. If you’re a sonar operator in a submarine, or a forest ranger on fire watch, you want your alerting system to be functioning at full capacity. But if you are suffering from a disorder that causes you to hear noises that aren’t there, you want to attenuate the warning system, and guanfacine can do this.”

And then there is this:

“Nicotinic receptors are so named because they respond to nicotine, whether smoked or chewed, and they’re spread throughout the brain. For all the problems it causes to our overall health, it’s well established that nicotine can improve the rate of signal detection when a person has been misdirected – that is, nicotine creates a state of vigilance that allows one to become more detail oriented and less dependent upon top-down expectations.”

So, it is clear that drugs can make you more or less jumpy when looking for signals within noise. Or, dare I say, make you more or less alarmist. I would be fascinated to learn how pre-held values and personality type may similarly affect brain chemistry. However, none of this alters the main theme of my article. Whichever way you look at it, one’s response bias should be set in accordance with a desire to maximise expected value. Only if it isn’t could it be said to be irrationally set. Taking drugs seems to be a good way of encouraging such a miscalculation.
