Gather round, boys and girls, because I want to tell you a story. It is a tale of two fearsome warriors engaged in a battle for your ecological soul. The first was an esteemed expert in all matters climatological and psychological. For the purposes of the tale, I will call him Stephan Lewandowsky. The second was a notorious denier of science who sought to overthrow the received wisdom of the many, with specious hand-waving arguments that flew in the face of all that is known of physics and basic mathematics. For the purposes of the tale, I will call him Ben Pile. Hear me now as I recount the day when they duelled at dawn, ably refereed by the world’s most impartial and open-minded moderator, whom, for the purposes of the tale, I will call Willard. If you want to know who emerges victorious, you can cheat and go straight to this article’s punchline. But if you want to know why, then you are going to have to be more patient.

You see, it all began when Stephan issued a grand proclamation for all the land to read. In it, he was able to demonstrate, with little more than basic mathematics, that when uncertainty increases, so does the risk. With a clarity of thought that most of us only dream of possessing, Stephan carefully (and patiently) explained that those of us who argued that nothing can be said regarding risk under conditions of high uncertainty were mistaken. This was because they were failing to take into account that the loss function was marked by a concave curve. Or was it convex? I don’t remember, but the point is that everyone knew (and by that I mean all economists knew) that the cost of risk mitigation rises non-linearly with the risk of warming. Consequently, a greater spread of future possibilities will allow for potentially high mitigation costs at the high impact end of the uncertainty spread, yet these are not offset by correspondingly lower costs at the low impact end. This can only mean that the economic risk rises as uncertainty increases.
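The ‘basic mathematics’ being attributed to Stephan here amounts to this: for a convex loss, the expected loss is at least the loss at the mean (Jensen’s inequality), and for a quadratic loss the gap is exactly the variance. A minimal Monte Carlo sketch follows. The quadratic loss, the normal distribution for warming and the central estimate of 3 are all assumptions made for illustration, not anything taken from Lewandowsky’s piece.

```python
import random
import statistics


def expected_loss(mean, sd, loss, n=100_000, seed=1):
    """Monte Carlo estimate of E[loss(T)] for T ~ Normal(mean, sd)."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    return statistics.fmean(loss(rng.gauss(mean, sd)) for _ in range(n))


def quadratic(t):
    """Hypothetical convex loss: damage grows with the square of warming."""
    return t * t


mean_warming = 3.0  # hypothetical central estimate, arbitrary units
for sd in (0.5, 1.0, 1.5):
    est = expected_loss(mean_warming, sd, quadratic)
    print(f"sd={sd}: expected loss ~ {est:.2f}, "
          f"loss at the mean = {quadratic(mean_warming):.2f}")
```

The analytic values are μ² + σ², i.e. 9.25, 10 and 11.25, so the estimates should sit close to those while the loss at the mean stays at 9. Note that nothing in the sketch says how reliable the assumed distribution is, which is the gap the rest of this article is concerned with.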

Ben wasn’t impressed and accused Stephan of simply re-inventing something called the precautionary principle. Now Willard, as you will recall, is a very fair individual who is very cautious about drawing conclusions unless he can be certain of them. So he cited the adjudication of a wise old sage called James, who was more than happy to point out the ‘really strange’ nature of Ben’s protests. It turns out that Ben had failed to notice that Stephan had only used basic mathematics to prove his point – it had nothing to do with the precautionary principle. It seems that Ben’s duelling pistol had jammed at the critical moment, whilst Stephan had fired a silver bullet. Willard, duly impressed by the calibre and metal of that projectile, was more than happy to declare Stephan the winner.

However, what Willard and his wise old sage had failed to notice was that Stephan’s bullet, far from piercing the blackened heart of the evil denialist, had embedded itself in an innocent tree that had strayed too close to the action.

Regrettably, we must leave that tree[1] to its slow and lingering death, as it cries out to its Mother Earth, and concentrate instead on what could possibly have caused Stephan’s perfectly aimed weapon to have failed in its holy mission. And to do this we have to start by recognising two key facts:

- As uncertainty increases, so does our inability to reliably quantify probability, and hence quantify the risk levels

- As uncertainty increases, so does the risk of being risk inefficient

The first point was understood by Stephan and the wise old sage (history does not record what Willard understood). But just because one cannot quantify a risk, that doesn’t mean that one cannot still know that it has to be higher than the known, quantifiable risks. And that, surely, was the point.

Due to uncertainty, we may not know just how bad it is going to be, but we know that the greater the uncertainty, the worse it has to be. However, Stephan was seemingly unaware that, by allowing a circumstance in which risk could no longer be reliably calculated, he had simply swapped risk aversion for uncertainty aversion, and this had actually been Ben’s point. Nevertheless, to Ben’s adversaries, his objections had been no more than ‘vacuous hyperbole’; whatever you call the aversion, it could still be used as a rational basis for a call-to-arms to mitigate the risk – whatever that might be.

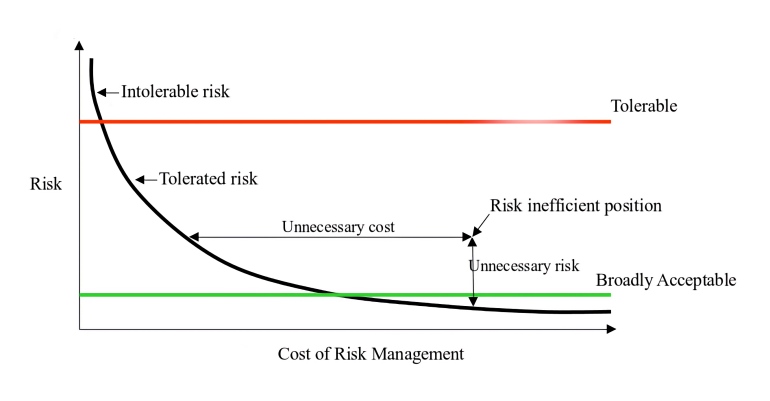

More importantly, what Stephan had not taken into account was the second point, and what it says regarding the relationship between uncertainty and risk efficiency. In fact, risk efficiency formed no part of Stephan’s argument or the sage’s observations. Which is a shame, because the first thing one is taught as a risk manager is that the risk manager’s obligation is not to minimise risk but to maximise risk efficiency. Perhaps it is time that I explain what risk efficiency is. To do that, I am going to break the habit of a lifetime and offer you a diagram:

This diagram conveys the idea that any level of risk can be driven down to an arbitrarily low value by investing further in risk reduction (or conversely by being sufficiently risk averse and accepting the cost of lost opportunity). However, it is likely that the law of diminishing returns applies here, hence the general shape of the risk reduction curve. This curve also represents the Pareto optima, i.e. when you are on the curve, the only way that you can reduce the cost is by increasing the risk, and vice versa. The region to the left of the curve is, by definition, unreachable. The region between the ‘Tolerable’ and ‘Broadly Acceptable’ thresholds is known as the ALARP region (where risk is As Low As Reasonably Practicable). Where you draw these thresholds on the diagram is a measure of your risk aversion. Where you choose to settle on the curve, within the ALARP region, represents a professional judgement taken in the face of practical considerations.
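The two ideas in that paragraph can be sketched in a few lines: picking out the Pareto-optimal options from a set of (cost expended, residual risk) candidates, and placing a residual risk relative to the ‘Tolerable’ and ‘Broadly Acceptable’ thresholds. The candidate options, threshold values and function names below are hypothetical, chosen only for illustration; in practice the thresholds are set by whoever owns the risk or regulates it.

```python
from typing import List, Tuple

Option = Tuple[str, float, float]  # (name, cost expended, residual risk)


def pareto_optimal(options: List[Option]) -> List[Option]:
    """Keep the options that no other option beats on both cost and risk."""
    front = []
    for name, cost, risk in options:
        dominated = any(
            c <= cost and r <= risk and (c < cost or r < risk)
            for _, c, r in options
        )
        if not dominated:
            front.append((name, cost, risk))
    return sorted(front, key=lambda o: o[1])  # order by cost expended


def alarp_band(risk: float, tolerable: float, broadly_acceptable: float) -> str:
    """Classify a residual risk against two hypothetical thresholds."""
    if risk > tolerable:
        return "intolerable"
    if risk > broadly_acceptable:
        return "ALARP region"
    return "broadly acceptable"


# Hypothetical candidate mitigation options; "C" costs more than "B"
# yet leaves more risk, so it is dominated and drops off the front.
options = [("A", 1.0, 0.50), ("B", 2.0, 0.20), ("C", 2.5, 0.30), ("D", 4.0, 0.10)]
for name, cost, risk in pareto_optimal(options):
    print(name, cost, risk, alarp_band(risk, tolerable=0.4, broadly_acceptable=0.15))
```

Choosing between the surviving options, once the dominated ones are discarded, is the professional judgement the paragraph describes; the code deliberately does not attempt it.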

So far, so good. Unfortunately, there are two fictions involved in this story. Firstly, the Pareto optima curve is hypothetical. In the real world, it is highly unlikely that you will be able to plot the curve and, even if you could, it would probably not be as well-behaved a function as I have shown here. Secondly, in the real world you will never actually be on this curve; you will instead find yourself somewhere to the right of it. The extent to which you are adrift determines your risk efficiency (the further adrift, the less efficient you are), and it manifests as unnecessarily high residual risk for a given risk mitigation investment.
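If one pretends, contrary to the first fiction, that the curve were known, being adrift could be measured as the residual risk carried above the curve for the same cost expended. The sketch below assumes an exponential frontier with made-up parameters, and the ‘excess risk’ measure is only one of several ways the gap might be quantified; none of it is drawn from a real risk assessment.

```python
import math


def frontier_risk(cost: float, r0: float = 1.0, k: float = 0.8) -> float:
    """Hypothetical Pareto frontier: residual risk falls with diminishing returns."""
    return r0 * math.exp(-k * cost)


def excess_risk(cost: float, observed_risk: float) -> float:
    """Residual risk carried above the frontier for the same cost expended."""
    gap = observed_risk - frontier_risk(cost)
    if gap < 0:
        # A point below the frontier would be in the unreachable region.
        raise ValueError("point lies beyond the frontier, which is unreachable")
    return gap


# Two hypothetical programmes with the same spend but different effectiveness.
for label, cost, risk in [("well targeted", 2.0, 0.25), ("poorly targeted", 2.0, 0.60)]:
    print(f"{label}: frontier {frontier_risk(cost):.3f}, "
          f"excess {excess_risk(cost, risk):.3f}")
```

In practice neither the frontier nor the true residual risk is known, so the excess can only be inferred after the event, which is the point made in the next paragraph.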

So what are the factors that prevent the risk manager from ensuring that we are sat on the Pareto optima curve? The simple answer is ‘uncertainty’. Yes, one can wantonly invest in management strategies that are known to be ineffective, but it is more normally the case that risk inefficiency is an accident born of ignorance, aka epistemic uncertainty. One usually discovers one’s risk inefficiency after the sure-fire remedy has failed. You thought you understood the risks, their magnitudes and their causations, but it turns out you didn’t!

If I could sum up the respective positions of the sceptical and alarmist camps in one slogan, it might be this:

Alarmists are more worried about the risk but sceptics are just as worried about risk efficiency

Contrary to Stephan’s understanding, uncertainty and its effects cannot be fully captured simply by looking at probability distributions relating to a loss function (pretending in the process that an aleatory analysis will serve for all types of uncertainty). This is a restricted understanding of uncertainty that only gives the appearance of invalidating the sceptics’ concerns. Rather, sceptics do have a legitimate interest in worrying about the uncertainty levels, if only because the levels are thwarting meaningful risk efficiency calculations. The worrying thing is that you can do more harm through being risk inefficient than by not being sufficiently risk averse. That’s why risk managers focus upon risk efficiency so much.

Of course, this doesn’t answer the question regarding which of the two concerns is the more important when it comes to climate change: Is it the potential increase in risk caused by uncertainty, or the potential decrease in risk efficiency, also caused by uncertainty? Well, the problem with this question is that it cannot be answered straightforwardly. We have long since left behind the realms where ‘simple mathematics’ can provide an answer. It’s not just a lack of understanding of the formal relationship existing between uncertainty and risk efficiency; it’s not just the uncertainties regarding the probabilities upon which risk calculation is based; it’s not just the uncertainties regarding the form of the Pareto optima curve for risk reduction; it’s not even the uncertainties that have been smartly overlooked by the economists who claim they understand the general form of the loss function. It is, instead, a problem resulting from all of the above. Uncertainty is trashing the whole show and (for all we know) one could end up spending a fortune on risk mitigation actions for a problem that is not nearly as serious as is claimed, cannot be addressed by the chosen mitigations, and may end up transforming into a new problem that is just as bad as the one we seek to avoid. There again, everything could be fine as long as we listen to Stephan and James.

Back at the duel, Stephan and Willard were looking perplexed. Stephan had aimed his gun straight and true, and yet Ben refused to go down. Willard thought this bizarre, until he remembered an important fact: Ben is just another denier who has been slain but doesn’t realise it. He walks on, like a zombie, denying the undeniable fact of his demise. Stephan had delivered his silver bullet, but you can’t kill zombies with a silver bullet; that’s for werewolves. The only way to kill a zombie is to decapitate it. Lacking a suitable instrument to perform such a gruesome deed, Stephan and Willard walked away, bemused but entertained by Ben’s ridiculous ‘survival’. Only Ben and the overly inquisitive tree seemed to know that Stephan had missed his target.

Stephan lost the duel, boys and girls, but that’s what you get when you underestimate the power of uncertainty. You can’t expect the unexpected.

[1] Don’t worry, boys and girls, it wasn’t Mann’s magic bristlecone pine tree.

The Lew has reinvented the concept of risk.

Which is an interesting invention from a guy who has no background in risk management, and whose authored papers show a misuse of stats and data.

Some of you may have noticed that there is a technical glitch on the diagram (an arrow pointing in the wrong direction). CliScep’s massive IT support team is working on the problem as we speak.

The link at “…more than happy to point out” goes to an article by James Annan supporting Lew against Ben. There are many comments from “Anonymous” under the article, most and possibly all of which were by me. (Hence all the replies to “Geoff.”) The point I was trying rather clumsily to make is that Lew’s argument, whatever its failings from the points of view of statistics or risk theory, is mystical nonsense, since it claims to derive a truth about the real world (increased probability of something happening) from the purely geometrical (or arithmetical, if you like) properties of a graph.

Briefly, if you take a fat tailed graph of probabilities of x happening, and squash the mode (most frequently mentioned, i.e. most “likely” value) down in order to indicate greater uncertainty, then the fat tail drifts off to the right, taking the mean with it, and the increased distance between mean and mode indicates greater probability of it being worse than you think.
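The behaviour described here can be checked with a lognormal distribution, used only as a convenient example of a right-skewed distribution bounded at zero (it is not claimed to be the distribution Lewandowsky used). Holding the median fixed and increasing the spread parameter σ lowers the mode and raises the mean, via the standard formulas mode = exp(μ − σ²) and mean = exp(μ + σ²/2). The median of 3 is an arbitrary assumption.

```python
import math


def lognormal_summary(median: float, sigma: float) -> dict:
    """Mode, median and mean of a lognormal with the given median and log-sd."""
    mu = math.log(median)
    return {
        "mode": math.exp(mu - sigma ** 2),
        "median": median,
        "mean": math.exp(mu + sigma ** 2 / 2),
    }


# Hypothetical median estimate of 3 (arbitrary units); sigma stands in for uncertainty.
for sigma in (0.2, 0.4, 0.6):
    s = lognormal_summary(3.0, sigma)
    print(f"sigma={sigma}: mode={s['mode']:.2f} mean={s['mean']:.2f} "
          f"gap={s['mean'] - s['mode']:.2f}")
```

Only the parameters of the distribution change in this calculation; no input represents the true value of the quantity being estimated.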

The flaw in Lewandowsky’s argument is his assumption that climate sensitivity cannot take a negative value, so he constrains the distribution at zero. I ignored this error, because I wanted to get at his outrageous misuse of the necessary truths of mathematics to “prove” a purely contingent proposition, something which nobody has attempted since Kant, or possibly since St Anselm, in his second argument for the Existence of God. Flailing around for an example of a fat-tailed distribution constrained at zero, I hit on bra sizes, which can go from an AA cup to the furthest reaches of your imagination. I pointed out that, while it may be possible for a domain expert to make a reasonable stab at the true size if the lady is wearing a frock, if she then increases uncertainty by donning a heavy woolly sweater, this in no way justifies claiming that a larger size has therefore become more likely. Despite some support from Lucia Liljegren, I got scoffed off the page, and my comments suppressed.

Vinny Burgoo had the last word, pointing out that Lew’s argument was tautologous, and I’d got my Monte Carlos mixed up with my skewed distribution.

“Some of you may have noticed that there is a technical glitch on the diagram (an arrow pointing in the wrong direction). CliScep’s massive IT support team is working on the problem as we speak.”

Update: Apparently he isn’t.

JOHN RIDGWAY

You say:

and:

This sounds to me like the logical error I was battling against at Annan’s, and frequently against Lew elsewhere. Uncertainty is a psychological state. You can give it an arithmetical value if you like, and then do anything you like with it statistically. But you can’t infer anything about the real world from a change in your psychological state, without “something else,” i.e. evidence from the real world.

A simple example:

We, the Cliscep gang, are standing on a hilltop trying to gauge how far it is to the pub in the valley. We’re a sharp-eyed lot in the main, trained in the boy scouts, and capable of producing a sharp-peaked consensual probability curve. But there’s a minority of pessimistic old grouches who think it’s much further off than the others, producing a fat tail to the estimates. A mist falls (climate change again) and our reason falters, our guesses become more hazardous, and the grouches more pessimistic than ever. Mean and mode part company. Uncertainty increases. But the pub hasn’t got any further away.

On the graph, I still haven’t worked out which arrow head is wrong. It looks like pure hyperbole to me. Surely the cost of risk management goes up with the risk? So is there a -1 missing somewhere?

‘I’ll be judge, i’ll be jury’, said cunning old Fury.

Thank you for saying that and for explaining it.

Geoff,

The misaligned arrow is the annotation arrow used to indicate the risk inefficient point on the graph. It should be pointing down to the left, not the right. Converting the diagram from Word seems to have caused a reflection of this element of the diagram.

The x-axis represents the cost expended, not the cost required.

Your example only refers to epistemic uncertainty. It takes no account of aleatory or ontological uncertainty.

Lewandowsky’s argument appertains to the calculation of expected value, as per standard utility theory. As such it is almost a mathematical triviality. It simply points out that a non-linearly increasing loss function will always lead to an increase in calculated expected value as the spread of the probability density function increases. I have no argument with that. I even accept that the mathematics applies irrespective of whether the probabilities entailed are objective or subjective (the expected value calculation doesn’t care). However, the choice of cost function is critical to the argument and only an ordinal argument can be made in this way. As soon as one attempts to make cardinal judgements regarding the risk levels under uncertainty, one is thwarted by the fact that the increase in uncertainty has rendered reliable calculation of the probabilities impossible. Hence the need to resort to uncertainty aversion. Nevertheless, this is not the real flaw in Lewandowsky’s argument. The real flaw is his failure to consider the importance of risk efficiency. This is a typical mistake made by individuals who do not have a risk management background.
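The ordinal-versus-cardinal distinction can be illustrated by computing expected cost under two different cost functions, both hypothetical. For a normally distributed T with mean μ and standard deviation σ, E[T²] = μ² + σ² and E[e^(bT)] = e^(bμ + b²σ²/2) are standard results. Both functions rank the wider distribution as costlier, but by how much depends entirely on which function is assumed.

```python
import math


# Closed-form expectations for T ~ Normal(mu, sigma); both cost functions are hypothetical.
def expected_quadratic(mu: float, sigma: float) -> float:
    return mu ** 2 + sigma ** 2                      # E[T^2]


def expected_exponential(mu: float, sigma: float, b: float = 1.0) -> float:
    return math.exp(b * mu + (b * sigma) ** 2 / 2)   # E[exp(bT)]


cost_models = {"quadratic": expected_quadratic, "exponential": expected_exponential}
mu = 3.0  # hypothetical central estimate of warming
for name, f in cost_models.items():
    narrow, wide = f(mu, 0.5), f(mu, 1.5)
    print(f"{name}: wide/narrow expected cost = {wide / narrow:.2f}")
```

The ordering agrees in both cases (ratios of roughly 1.2 and 2.7), but the magnitudes do not, which is why the choice of cost function is critical to any cardinal conclusion.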

Nice post. Unfortunately, Stephan is not the only crusader on that cause.

I thought the comment from nullius in verba, which I noticed as related at Climate Etc way back when, was useful regarding this line:

The Precautionary Principle in the absence of quantified risks is equivalent to Pascal’s Wager. It means that the decision is determined entirely by the scariness of the hypotheses being offered rather than the strength of the evidence. Usually a false dilemma is being offered – two scenarios, one scary, one not, when there are many more scenarios possible (and more likely)… …The analogy is fairly straightforward. The Precautionary Principle as commonly applied to climate change says that even if you’re not fully convinced that it will definitely happen, if you accept that it might happen, the costs are so high (e.g. Ted Turner’s cannibal scenario) that it’s still the only rational choice to act to prevent it. Pascal’s Wager applied to the Christian afterlife mythology says that even if you’re not fully convinced that it will definitely happen, the costs (eternal torment versus eternal bliss) are so high that the only rational choice is to believe. The distinctive features of the argument are that it offers only two alternatives with the putative costs embedded in the hypothesis, and the conclusion arises from the hypothesised costs alone, not the evidence. https://judithcurry.com/2011/05/28/uncertainty-risk-and-inaction/

JOHN

I understand that it only refers to epistemic uncertainty, and that’s the sort Lewandowsky is dealing with. My point is far more general than any question as to whether Lewandowsky has, or should have, considered risk efficiency. It is that he is claiming that changes in the real world can be effected by changes in the mental states of people (in this case, their estimates of climate sensitivity.) Of course, one or more of these estimates may correspond to the correct figure for the “real” climate sensitivity. Nonetheless, it remains what it is, an idea, a thought, a guess – something in someone’s head, a data point on his graph. And nothing that Lewandowsky does with such a graph can change the “real” figure for climate sensitivity.

He argues that increasing uncertainty would mean that sensitivity “is” greater than it was, or would have been, otherwise. It follows that greater certainty (brought about by better measuring devices etc.) means that climate sensitivity will necessarily be less than we currently think it is, in our present state of uncertainty.

I’ve been pointing out for ages that this is madness, and I’ve yet to have anyone agree with me, or point out where I’m going wrong.

Hold on, isn’t the point being made very simple? If you have a convex cost function (for example, C = a T^2) then if there is some uncertainty in T, the expected cost is bigger than the mean cost. Also, this difference increases as uncertainty increases. In other words, the larger the uncertainty, the larger the expected cost.
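Written out for the quadratic example C = aT² with a > 0, using only the definition of variance (μ and σ² are notation introduced here for the mean and variance of T):

$$
\mathbb{E}[C] = a\,\mathbb{E}[T^2] = a\left(\operatorname{Var}(T) + \mathbb{E}[T]^2\right) = a\left(\sigma^2 + \mu^2\right),
\qquad
\mathbb{E}[C] - C(\mu) = a\,\sigma^2 .
$$

The gap between the expected cost and the cost at the mean grows in proportion to the variance and vanishes only when there is no uncertainty in T.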

Cli-sci, subjective versus objective, what does the data show, and that doesn’t mean the model ass-umptions? Lol. https://beththeserf.wordpress.com/2018/12/23/56th-edition-serf-under_ground-journal/

Thanks for that, Andy.

Lewandowsky’s argument is an ordinal one, in which it matters not whether the probabilities are objective or subjective. Therefore it isn’t ambiguity aversion that leads him to his conclusion. However, neither is the conclusion an adequate justification for denying that the precautionary principle is being applied. Rather, it is just an argument for why, on this occasion, the precautionary principle has a stronger rationale than is usually the case (although this is not what he thinks he is arguing). It is all very well saying that the expected value will increase as the pdf spreads, but the spread of the pdf is not the only expression of uncertainty. Just as important is the reduced reliability of the probability calculations that are necessary before one can say if one is being risk averse with respect to any posited outcome. Without a cardinal understanding of likelihoods one cannot establish a sensible risk management policy, other than one based upon a generalised fear and a sense of urgency.

Anyway, this is all a bit academic because Lewandowsky’s failure to take risk efficiency into account undermines his conclusion that greater uncertainty supports the alarmist position. As soon as uncertainty clouds the calculation of risk inefficiency, the door is open to apply the ordinal argument against itself; so it becomes the precautionary principle versus the precautionary principle.

John,

Can I clarify something. In this particular case, are you agreeing with Ben Pile?

Prof. Rice,

You appear to have fully understood Lewandowsky’s argument and totally failed to understand mine. Can you tell me what the risk inefficiency cost function looks like in comparison to that of the expected cost? Lewandowsky’s argument is not simple, it is simplistic.

John,

As far as I can tell, I do understand yours, but I don’t think it somehow refutes Stephan’s point about uncertainty. I am still interested in whether or not you agree with Ben in this instance.

So, is risk and uncertainty measured in pounds and kilos, or yards and meters?

Prof. Rice,

As I explain in the article, I agree to the extent that Ben claims that the Lewandowsky argument is a version of the precautionary principle. The ordinal basis for Lew’s argument strengthens the justification for application of the pp, but the lack of cardinal assessment of risk still places the argument in the precautionary camp. I also claim that Lew misses the point regarding uncertainty. Greater uncertainty increases both the original risk and the risk of any proposed mitigation being risk inefficient. Without an understanding of the relationship existing between uncertainty and risk efficiency, and between risk efficiency and any concomitant risk, one cannot draw any conclusions regarding the imperative to act if those conclusions are based solely upon the relationship between uncertainty and the original risk. Remember, the argument was that sceptics are wrong to claim that uncertainty should be reduced before action should be decided.

John,

Okay, thanks.

Geoff,

“He argues that increasing uncertainty would mean that sensitivity “is” greater than it was, or would have been, otherwise.”

I don’t believe he does argue this, though at times his choice of words does give that impression. The mathematics he uses is that of utility theory and the calculation of the expected value, which is a probability weighted average of all possible values. Mathematically, this value does increase, inter alia, when the probability distribution widens (but only if a non-linearly rising relationship is assumed between cost and warming). However, nothing is being said about the reality – the expected value is still, as you would say, a mental state. So the widening of the pdf actually changes two mental states: the state of uncertainty and the state of expectation. As you rightly point out, the reality is what it is and remains impervious to expectation. Unfortunately, lacking the required time machine, policies have to be based upon expectation.

What about the risk of believing there’s a risk which in fact isn’t there?

This is all rather beside the point anyway. Lew was arguing this rather fanciful notion of a greater risk of catastrophe because of fat-tail high end climate sensitivity estimates back in 2012. In 2014, Nic Lewis demonstrated, using the IPCC’s own underlying estimates of forcing and heat uptake, that the climate sensitivity probability distribution became considerably narrower as a result, thus implying a diminishing probability of an extreme atmospheric response to increasing CO2.

AR5 does not give a 95% bound for ECS, but its 90% bound of 6°C is double that of 3.0°C for our study, based on the preferred 1859–1882 and 1995–2011 periods.

Considerable care was taken to allow for all relevant uncertainties. One reviewer applauded “the very thorough analysis that has been done and the attempt at clearly and carefully accounting for uncertainties”, whilst another commented that the paper provides “a state of the art update of the energy balance estimates including a comprehensive treatment of the AR5 data and assessments”.

Earlier sensitivity studies based on observed warming during the instrumental period (post 1850) have generally used forcing estimates derived from one or more global climate models (GCMs), particular published studies and/or the forcing estimates given in AR4 – which were only for a single year. Our paper appears to be the first to use the comprehensive set of forcing time series and uncertainty ranges provided in AR5. They are based on careful assessments of forcing estimates from various published studies, and are likely to become widely used in observationally-based sensitivity studies.

There is thus now solid peer-reviewed evidence showing that the underlying forcing and heat uptake estimates in AR5 support narrower ‘likely’ ranges for ECS and TCR with far lower upper limits than per the AR5 observationally-based ‘likely’ ranges of: 2.45°C vs 4.5°C for ECS and 1.8°C vs 2.5°C for TCR. The new energy budget estimates incorporate the extremely wide AR5 aerosol forcing uncertainty range – the dominant contribution to uncertainty in the ECS and TCR estimates – as well as thorough allowance for uncertainty in other forcing components, in heat uptake and surface temperature, and for internal variability. The ‘likely’ ranges they give for ECS and TCR can properly be compared with the AR5 Chapter 10 ‘likely’ ranges that reflect only observationally-based studies, shown in Table 1. The AR5 overall assessment ranges are the same.

Solid science combined with actual observations can be a real bugger when you’re trying to promote a hypothetical risk-based catastrophe using statistical jiggery-pokery. But I doubt Lew would know too much about solid science, or want to know.

https://climateaudit.org/2014/09/24/the-implications-for-climate-sensitivity-of-ar5-forcing-and-heat-uptake-estimates-2/

Jaime,

This is what modellers were saying back in 1990:

“What they were very keen for us to do at IPCC [1990], and modellers refused and we didn’t do it, was to say we’ve got this range 1.5 – 4.5°C, what are the probability limits of that? You can’t do it. It’s not the same as experimental error. The range is nothing to do with probability – it is not a normal distribution or a skewed distribution. Who knows what it is?”

By 2014 probability limits were all the rage it seems. What changed? Could it be that “solid science combined with actual observations” became no substitute for understanding what can and cannot be achieved by using analytical techniques designed to model aleatory uncertainty?

John, analytical techniques designed to model aleatory uncertainty cannot be a substitute for solid science combined with actual observations, or vice versa. One can choose to focus on one or the other (or even both) in order to get some idea of what may or may not happen in the future and how likely (or not) our projections of possible futures may be, and whether or not current and proposed policy is commensurate with those projections. From my point of view, if observations combined with energy balance models are consistently generating estimates of transient and equilibrium climate sensitivity that are towards the lower end of the spectrum, whilst also diminishing the probability of high end estimates, then that is good enough reason to urgently re-weigh the putative diminished risk of climate catastrophe against the costs of mitigation.

— Can I clarify something. In this particular case, are you agreeing with Ben Pile?—

Heaven forfend!

This discussion has not really dealt with the different perceptions of uncertainty. Among the broad climate alarmist community are two extremes. At one extreme there is the Children’s Crusade and Extinction Rebellion types who think the world will end unless “we” have zero emissions by Thursday week. On the other extreme are the likes of William Nordhaus who project that the costs of unmitigated climate change in 2100 will be 4% of global output, when global output is about 10 times higher than today. The difference between the two is between amplifying vague opinions and actually doing the work to understand and specify the problem, stating any assumptions.

One question worth discussing is how much of GDP is spent today, or has been spent historically, on climate risks?

Seawalls, levees (barrages), flood damage repair, dams, canals, etc. can all be thought of as either adaptation to or mitigation of climate risks.

So what do we spend on these now and what can we expect to spend on them in the future with or without dramatic climate change?

Lew, and the rest of the catastrophists pushing the “12 years to save Earth” are not serious thinkers. They are the Pol Pot/ Khmer Rouge of climate, pushing pastoralist nonsense that is anti-scientific, irrational and destructive.

The cowardly consensus, as we see from Prof. Rice and others, cannot even contemplate honestly that their extremism could be misplaced.

But perhaps a way forward out of catastrophist apocalyptic bullshit served so frantically would be to focus on actual infrastructure needs for the future.

Unlike climate doom predictions, infrastructure needs can be discussed calmly by people of good will.

Only green extremists in the pastoralist catastrophe camp will object.

MB,

I’m not sure 4% of global output is alarmist, if we are to believe the projections of economists who say global output will have grown vastly by 2100, because in that case we won’t notice the 4%. Unless the 4% was intended to be in real terms as pegged to current output (i.e. there is no growth).
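The arithmetic behind this point, taking the figures quoted earlier in the thread at face value (global output about ten times today’s by 2100, unmitigated damages of 4% of that output) and writing Y₀ for today’s global output:

$$
\text{damages} = 0.04 \times 10\,Y_0 = 0.4\,Y_0,
\qquad
\text{output net of damages} = 10\,Y_0 - 0.4\,Y_0 = 9.6\,Y_0 .
$$

In absolute terms the damages would equal 40% of today’s output, yet output after damages would still be 9.6 times today’s level; with no growth, the same 4% would instead be 0.04 Y₀.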

I’m not sure that a lot of work to analyse all the perceptions of uncertainty and the relative weight of each would be worthwhile (although interesting). The dominant authority narrative is a certainty of catastrophe (absent dramatic action): https://wearenarrative.wordpress.com/2018/11/15/the-catastrophe-narrative/ …which although at the more forceful end of expression, the orgs that you mention above are aligned to. Given this narrative is not supported by mainstream science, then clearly more uncertainties are acknowledged as we travel further from the public domain and nearer to the reality constraints of science (even biased science has more constraints than the free evolution of emotive memes in the public sphere, which is how such an influential authority narrative arose in the first place; the ‘amplification of vague opinion’ as you put it, the amplification being emotive in nature). The gradation from less to more constraints can be seen even in a single org (the IPCC), per the Rossiter model (see the footnotes at the link above). And as those reality constraints start to bite, there are various different perceptions of which the Lew interpretation here is probably of the most tenuous stripe that can still claim scientific underpinning with *some* credibility (it has clearly received some), notwithstanding all John’s valid challenges. And as John has noted in this parish before, uncertainty principle approaches gain more weight away from the public domain and further into reality constraints (which also include legal / policy as well as scientific realities), albeit some of these approaches are essentially only foils that still allow acceptable allegiance to a cultural belief in catastrophe (and so emotive conviction) but via a less obviously incorrect route.

I think that when you have enormous emotive pressure from the public domain (from top authority sources right down to grass roots), and after decades of widespread bias in the science / policy / legal apparatus too, no-one on the inside, so to speak, really wants to actually ‘do the work’, given that it may come up with an ‘unacceptable’ answer, and I guess that’s the real issue. And actual attempts like Lew’s discussed here have essentially just embedded apocalyptic consequences into a formal expression that bypasses all other possibilities, a product of bias. Even those contributors who merely point out in public such provisional reality constraints as mainstream science has already reached tend to get it with both barrels. Lomborg, for example, points out that the economic impacts of climate change would be small compared to most other changes (I think that was medium confidence, high agreement); that output doesn’t address uncertainty directly, but it certainly undermines the narrative of a high certainty of catastrophe (a narrative itself claiming to be supported by science).

It was nearly seven years ago, that exchange. What surprised me was not who did or did not ‘win’, but the unwillingness to debate across domains and lines of disagreement. (Of course, I did not expect Lew to come flocking with apologies. Neither did I expect any grace from weasel-Stoat. But since the Bible was translated into English, the expectation of experts is that they *ought* to be able to explain to their lessers, even if they demand deference.) The closest thing I can recall to a material criticism was that I had not understood the nuances of the term ‘expected’. But Lew had claimed that ‘basic mathematics’, not degree-level statistics, was instructive to a lay audience, and that was not the only word-play on show.

What motivated my criticism was the understanding that the Precautionary Principle is ‘risk assessment without numbers’. It seems also to be geometry without area or space. This leaves the connection between models and the real world in question. Accordingly, it raises a question mark over the connection to the real world of the experts who decide What Is To Be Done, and who claim to be the only ones competent to decide our future. The obvious flaw, in my view, is not only that the variables can be provided to suit whatever argument is required, but that the parameters themselves cannot be debated.

I can see why it would be irritating to have some mere Arts graduate (relatively freshly minted, at that) ask questions about the form of politics that emerges out of putting our future entirely in the hands of risk-modellers. Yes, I can see that it makes sense, when certain forms of decision need to be made, to model risk. Yes, I was challenging the authority of statistics without having a background in statistics. My point, however, is that there comes a stage at which models deny human agency. (Where ‘agency’ is the capacity to make decisions, not ‘agency’ as used by greens as synonymous with ‘guilt’.)

From that perspective, the risk-modellers’ models do not look like an argument for a particular course of action, but for a particular form of social organisation: a political order, the parameters of which are determined by various ‘sciences’. It is not a coincidence that this form of ‘science’, or this new role for ‘science’, emerged at a time during which traditional politics (i.e. the democratic contest of ideology) narrowed and hollowed out, and the domain of politics increasingly switched from the macro-management of economics to the micro-management of people. This tendency extended even to managing individuals’ inner experience, with governments setting objective targets to improve measures of ‘subjective sense of well-being’; malaise, of course, being a risk factor that only a ‘quality of life barometer’ could detect. Software was developed which would calculate the risks foetuses would be exposed to in their family lives, based on socio-economic statistics, to allow government agencies to design interventions that would stop the foetus developing into an antisocial lout, a criminal or even a terrorist… and one day, a UKIP voter. The models began to make people.

I’m not particularly interested in the geometry of risk models. I am particularly interested in the application of risk models to the management of society, and in the transformation of relationships in society that is both the necessary condition and the likely consequence of that application, which results in the transformation of people. The denial of agency has its own consequences (risks), the dangers of which are tangible enough to be explored without modelling them. It creates a passive population unable to judge, and forbidden from judging, risks. It precludes dissent, debate and democracy: things which we know are components of a society that tends towards cooperation (through whichever form of distribution people, non-experts, decide is best for them), justice (as guided by experts, but judged by lay people), and science (as experts, but not arbiters)… I could go on. This is because many of the judgements life forces us to make rest not on science, but on art. Which is not to say painting and music, but that technical processes are both limited and limiting, whereas *experience* is what ultimately improves judgement. If technical processes were not limited and limiting, it is very likely that they would not be useful.

I do not believe that the risk-modellers are, in all cases, doing science.

The lure of putting experts in charge of Spaceship Earth is obvious. But it is only the self-appointed crew who imagine it to be a spaceship, with operating manuals, blueprints, and design tolerances. Others find it easier to cope with the messiness of life without their help, which is, as often as not, a hindrance. Or worse. Jacob Bronowski said it best.

Manic,

“This discussion has not really dealt with the different perceptions of uncertainty.”

True. But there again, it wasn’t intended to. I make no specific claims regarding the scale or perception of uncertainties. The question is what role uncertainty should play in decision-making, i.e. do high levels of uncertainty justify taking action, or should they, as sceptics maintain, be used to justify deferring action? My simplistic summary of the debate would be as follows:

Lewandowsky’s claim is that the sceptical argument for inaction is based upon the existence of uncertainty, i.e. we say it would be inappropriate to act whilst uncertainties exist. He presents an argument (based upon ECS uncertainty) that the uncertainty actually acts the other way, i.e. his is an argument for taking action under uncertainty. There is an assumption that sceptics argue instead for inaction under uncertainty because they do not share his understanding. This misrepresents the sceptics’ position in two important ways.

Firstly, it is precisely because sceptics do understand Lewandowsky’s point that they are distrustful of the claims for high levels of ECS uncertainty, particularly in light of the evidence that its magnitude is being sustained for political purposes. See, for example:

Jeroen van der Sluijs, PhD thesis (1997): PhDThesisJeroenvanderSluijs1997.pdf

I would add to that my own suspicion that the techniques used to analyse and formulate ECS uncertainty are being misused. I have presented evidence in my previous comment that pressures to misuse them were being applied by the IPCC back in 1990. They now appear to have succeeded in that purpose. I am surprised that this hasn’t engendered greater concern.

Secondly, what sceptics do argue is that uncertainties relating to causal factors increase the risk of risk inefficiency. This is important because there are substantial risks associated with being risk inefficient that may outweigh the putative benefits of a specified risk mitigation (ask Macron). This is an aspect that simply is not addressed by Lewandowsky’s focus upon ECS uncertainty.

I don’t dispute that the real objective should be to understand and specify the problem, but I’m sure that Lewandowsky would argue that his simple mathematics has already done an adequate job of that (I argue that it hasn’t). And whilst some of us may want to better understand and specify the problem, yet more are saying there is no time for that — such niceties hold little appeal in the face of a culturally embedded fear.

Andy,

“And as John has noted in this parish before, uncertainty principle approaches gain more weight away from the public domain and further into reality constraints (which also include legal / policy as well as scientific realities), albeit some of these approaches are essentially only foils that still allow acceptable allegiance to a cultural belief in catastrophe (and so emotive conviction) but via a less obviously incorrect route.”

Absolutely!

Here is that post — Turning Uncertainty into Certainty – Reinventing the Precautionary Principle

What has happened since is that 2 degrees turned into 1.5 degrees. As I said, the variables can be changed to suit the argument, and the parameters may not be challenged. Oh, and Lew won an award and moved to the UK, where he suddenly became an expert in why people voted Brexit.

Ben,

Thank you for placing this article in its original context of seven years ago. As readers of my articles will already know, I don’t do ‘current’.

It is also clear from your comment that my article had steered clear of some of your more substantive concerns at the time, preferring instead to focus simply upon the claim that you had been incorrect in accusing Lewandowsky of applying a precautionary argument. I believe that accusation was a key issue that devalued the conclusions that Lewandowsky was drawing.

I should also say that your misinterpretation of Lewandowsky’s allusions to expectation was not simply the result of your lacking a suitable background. I did have an understanding of utility theory and expected values when reading his articles, and yet it still wasn’t at all obvious to me that he was using the term ‘expect’ in the strict mathematical sense. As you say, there seemed to be some wordplay there.

Finally, since you have brought up the subject of ‘human agency’ in the context of risk modelling, may I say that, throughout my career in risk management and critical systems safety analysis, the overriding sense I had of the risk models is that they were a means of bestowing a veneer of scientific respectability on human preconceptions.

Thanks John. And thank you for shedding light on the debate. I remember at the time discussing the problem of statistical expertise, I think with Geoff. It is a shame you were not around then. Not for the win, but for the debate that could, perhaps, have then happened, across those domains/disagreements. But then again, I think it likely that your apparent agreement with part of my argument is the cue for such a discussion to be terminated, not to begin. The calibration of consensus-enforcers’ moral compasses must start with a triangulation from their demonology, after all. To debate is to give succour.

It’s human preconceptions all the way down, isn’t it? I don’t think that’s necessarily a problem by itself. I’ve even said so, e.g. when modelling projected ‘impacts’ (body bags) from Nth-order consequences of global warming. The problem comes when it’s forgotten that it begins with human preconceptions of one form or another. The ‘certainty’ emerges when it is assumed (and forgotten) that people cannot adapt to their circumstances, even when that adaptation (in the real sense of the word, not the meaning used by climate technocrats) is trivial, or would stand in a normal (i.e. non-climate) discussion as ‘wealth’.

Ben,

Great series, The Ascent of Man, and one of the most memorable clips from it, which humans as a whole still have not fully / deeply heeded.

“The ‘certainty’ emerges when it is assumed (and forgotten) that people cannot adapt to their circumstances, even when that adaptation (in the real sense of the word, not the meaning used by climate technocrats) is trivial, or would stand in a normal (i.e. non-climate) discussion as ‘wealth’.”

In the climate domain, yes, with other things ‘forgotten’ too, which is to say suppressed by emotive conviction. But similar enforced certainties emerge in the context of religion, philosophy, science, and politics, or sometimes mixed elements, and have done so essentially always. I find it ironic that the all-encompassing blueprints for living that these tend to generate (at the biggest scale) are not ultimately the result of theological or philosophical or scientific or political thought, which is a thin veneer on top, plus the justification, but of the raw instinct for the in-group all to sing off the same hymn-sheet. This also means that each of these enforced consensuses will necessarily create out-groups, which, while they are demonised and fought with an everlasting hope of their defeat, are in fact a fundamental feature that must accompany any such emergent consensus, and so could only die with the death of the consensus itself.

Andy – Great series, the ascent of man, and one of the most memorable clips from it, which humans as a whole still have not fully / deeply heeded.

Some interesting facts about The Ascent of Man, and that clip.

David Attenborough commissioned the series, though over the course of my lifetime he has descended into Malthusian misanthropy.

Leo Szilárd, whom Bronowski mentions, wrote with Albert Einstein to President Roosevelt to persuade him to develop the nuclear bomb before Germany did. After the war, both scientists wrote another letter, arguing that the military power the bomb had unleashed required the formation of World Government (their term). The basis of this government would, of course, be science.

I think this is what Bronowski means when he says science stands on the edge of error, and is a very human form of knowledge.

Scientists who continued Szilárd and Einstein’s political campaign used the atmospheric models they had developed to illustrate the dangers of nuclear war (models which, it turned out, were flawed) to make the argument for World Government (still their term) to avert climate change.

We can only guess what they would have said, had Einstein, Bronowski or Szilárd lived on into the era of climate change. Were they really the masters of the insight they produced?

I don’t know where fat tails, thin tails, concave or convex curves come into it. History is useful though. Which is maybe why some climate alarmists are keen to deny it and delete it. Models are so much more obedient.

ANDY WEST @ 12:53 pm

There are three ways to consider whether losses of 4% of global output in 2100 are alarmist.

The first, by which it is not alarmist, is the spectrum of opinion: Nordhaus and Lomborg are at one end, with the children’s crusade & Extinction Rebellion at the other.

On your point, in the context of the huge economic growth it might seem trivial. But in today’s terms UK GDP is around 3.3% of global output, so losses on that scale would mean a number of natural catastrophes every year, possibly with deaths in the millions.
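The two framings above (a small slice of a much larger 2100 economy, versus a loss bigger than the UK’s entire output in today’s terms) can be put side by side in a few lines of Python. This is a minimal sketch: the 2% annual growth rate and the 2019 to 2100 horizon are hypothetical assumptions chosen only for illustration; the 4% loss and the ~3.3% UK share are the figures quoted in the comments.

```python
"""Toy arithmetic for a loss of 4% of global output in 2100.

Hypothetical assumptions (not from the thread): 2% real annual growth in
global output and a horizon from 2019 to 2100. The 4% loss and the ~3.3%
UK share of global output are the figures quoted in the comments.
"""

GROWTH_RATE = 0.02    # hypothetical annual real growth of global output
YEARS = 2100 - 2019   # hypothetical horizon in years
LOSS_SHARE = 0.04     # loss as a fraction of 2100 global output
UK_SHARE = 0.033      # UK GDP as a fraction of global output today


def output_multiple(rate: float, years: int) -> float:
    """Global output at the horizon, as a multiple of today's output."""
    return (1.0 + rate) ** years


def equivalent_growth_rate(rate: float, loss: float, years: int) -> float:
    """Constant annual growth rate that ends at the same, reduced output."""
    # Solve (1 + r_eq) ** years == (1 + rate) ** years * (1 - loss) for r_eq.
    return (1.0 + rate) * (1.0 - loss) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    no_loss = output_multiple(GROWTH_RATE, YEARS)
    with_loss = no_loss * (1.0 - LOSS_SHARE)
    slower = equivalent_growth_rate(GROWTH_RATE, LOSS_SHARE, YEARS)
    print(f"2100 output, no loss: {no_loss:.2f} x today")
    print(f"2100 output, 4% loss: {with_loss:.2f} x today")
    print(f"equivalent growth rate: {slower:.4%} instead of {GROWTH_RATE:.2%}")
    print(f"loss in today's terms: {LOSS_SHARE / UK_SHARE:.2f} x UK GDP")
```

Under these assumptions the loss is equivalent to shaving only a few hundredths of a percentage point off annual growth, while in today’s terms it is still larger than the whole UK economy; both readings come from the same number.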

The way in which I believe it is alarmist is based upon the assumptions within the economic modelling. In particular, there is what I call the “dumb economic actor” assumption: that over decades people collectively do not recognize that the environment is becoming increasingly warmer and more chaotic, and do not adjust their behaviors to reduce the costs of random impacts. This belies experience. As countries have become richer, the impacts of random natural events have lessened. For instance, the EM-DAT database of all types of disasters shows that the number of deaths from all recorded natural disasters has decreased, despite massively improved data collection. People learn from the past. Also, as countries become richer they can better afford measures to counter the impacts; for instance, better building design to counter the impact of earthquakes.

From what you say in the rest of the comment, I think we are roughly in agreement. My way of viewing the issue is that Lew is putting the belief at the forefront and making any evaluation of evidence, or adoption of techniques from other areas, subservient to it. The “climate consensus” filter only works one way. Those who question the dogmas are outed as “deniers of science”.

Ben, science stands on the edge of error. Climate science however, stands on the edge of terror.

JOHN RIDGWAY (17 Mar 19 2.57pm)

You say:

Lewandowsky does much more than this. In his article “Uncertainty is Not your Friend”

http://www.shapingtomorrowsworld.org/lewandowskyuncertainty_i.html

he says:

Lewandowsky rephrases “..we’re not certain that the problem’s there..” as meaning: “there is so much uncertainty that I am certain there isn’t a problem.”

It is not possible that a professor of psychology could be so stupid as to think that his rewording is a fair summary of the opinion quoted. Therefore he is a liar and a charlatan. He was a liar and a charlatan in March 2012, even before his “Moon Hoax” paper was published, and he’s been lying ever since.

I repeat my question, which you haven’t answered, and which can only be answered by someone with more statistical expertise than I possess, (including an understanding about what the Reverend Bayes was on about):

“Is it or is it not true, as I believe (on the basis of a philosophical hunch) that Lewandowsky, in claiming that a change in the distribution of estimations of climate sensitivity can effect a change in the probability of something happening in the real world, is engaging in magical thinking equivalent to me sticking pins in his effigy?”

And I hope it bloody well hurts.

ANDY WEST (17 Mar 19 5.34pm), BEN PILE (17 Mar 19 7.37pm)

Because of Jacob Bronowski, whom I remember on a black and white telly in my childhood, though I never saw Ascent of Man. (Surely you two weren’t old enough to be watching highbrow telly then?)

Not every Eastern European immigrant can become a top scientist working for the British Government on bombing strategy during the war while at the same time being investigated by MI5 as a security risk, and also write a book on the mystical poetry of William Blake. A couple of Bronowski’s poems in the Penguin Book of Spanish Civil War Verse suggest that he fought there, though Wiki doesn’t say so. He didn’t like what he saw at Hiroshima and Nagasaki, which he reported on in secret for the British government.

Bronowski was the kind of person the BBC used to hire to do its science programmes, though he never played in a Boys’ Band.

“History is useful though. Which is maybe why some climate alarmists are keen to deny it and delete it.”

History is indeed a great guide. Although subject to distortion by cultural lenses (and indeed straight deletion), no culture has ever been strong enough to wipe out all other views or prevent investigative repairs after its own power has faded. And history is replete with clues about how to distinguish a cultural ‘truth’ from reality, however it may be promoted by science / religion / philosophy / politics or whatever combo thereof.

Geoff:

“Surely you two weren’t old enough to be watching highbrow telly then?”

Indeed I was, being of a serious bent for my age at the time, although in truth I didn’t see all the episodes until much later. Incidentally I have a large size fully illustrated complete works of William Blake, which a few years back inspired one of my science fiction stories.

> Willard, duly impressed by the calibre and metal of that projectile was more than happy to declare Stephan the winner.

Citation needed.

— Surely you two weren’t old enough to be watching highbrow telly then? —

I wasn’t born then. Luckily the Internet can direct us to when TV was worth watching. Rumour has it that one of Brian Cox’s series was an attempt to re-make The Ascent of Man, urged on by Attenborough. And what an abortion it was. The total opposite. One episode concluding…

Cox had watched too much Sagan (another of the anti-nuclear bomb scientists who traded in gloom) and perhaps dropped too much acid to make any sense. Bronowski had said that science does not turn people into numbers, but Cox turned people into not even numbers… No purpose, nothing special. I can see why he might think that.

While we are dusting off our ancient memories of science series on the TV, might I offer for your consideration James Burke’s Connections? Presented with wit and flourish, this was a tour de force, showing the non-linear march of knowledge and the often unexpected links between discoveries. The whole structure of the series was unique, with the developments of each of the preceding episodes combining in the last programme and culminating in a down-the-throat view of a launching Titan rocket.

Much as I love Bronowski’s series (and I consider the scene in the Death Camp without peer), Burke’s effort was clever and better fitted the fast-approaching technological age, with its multiple interconnections and its rapid pace.

JOHN RIDGWAY @ 16 Mar 2:57 pm

Thanks for taking the time to clarify your perspective in briefer terms. My own understanding of risk and uncertainty is more limited, being based upon microeconomics. However, the explanation, in cost terms, of spending money to reduce the uncertainties is quite clear, or at least the policy proposals that would clearly violate any optimal risk-efficient strategy are. With respect to climate mitigation, what violates the strategy is a costly policy that does not work, just as it would if the relationship between emissions and the costs of climate change impacts were precisely known. Spending money on a policy that fails to reduce emissions makes those spending the money worse off than doing nothing. It is not a trivial or irrelevant issue. If, like Nobel Laureate William Nordhaus, we assume that costly climate change will result from continued global CO2 emissions, the result will be the same. That result comes from the reality of the Paris Agreement. The vast majority of countries have no policy intentions to cut their emissions. As the economic case for cutting emissions is one of minimizing the sum of climate change and policy costs, countries which spend money on cutting their emissions will be net worse off. Under the Nordhaus model, the world as a whole is slightly better off, with the countries that gain most being the non-policy countries. If political leaders both want to serve the best interests of their country rationally and believe in catastrophic climate change, the best strategy is (a) to do as little as possible, (b) to appear to be doing a lot, and (c) to get other countries to spend real money on cutting emissions. I elaborated further in a post last week.

https://manicbeancounter.com/2019/03/15/nobel-laureate-william-nordhaus-demonstrates-that-climate-mitigation-will-make-a-nation-worse-off/
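The free-rider logic in the comment above can be made concrete with a deliberately crude toy model in Python. This is not the Nordhaus model or anything from the linked post: the equal sharing of avoided damages, the ten-country world and the cost and benefit figures are all hypothetical assumptions. It only shows the structure of the argument: when the avoided damages exceed the cost, the world as a whole gains, yet the country paying for the cuts is net worse off and the non-policy countries gain most.

```python
"""Toy free-rider sketch of unilateral emission cuts.

All numbers are hypothetical. Every country bears an equal share of global
climate damages; a policy country pays the full cost of its own cuts, while
the damages it avoids are shared evenly by everyone.
"""

from dataclasses import dataclass


@dataclass
class Country:
    name: str
    cuts_emissions: bool


def net_gains(countries, avoided_damage_per_cutter, cost_per_cutter):
    """Net gain per country relative to a world where nobody cuts emissions."""
    cutters = sum(c.cuts_emissions for c in countries)
    # Avoided damages are a global benefit, spread evenly across all countries.
    share = cutters * avoided_damage_per_cutter / len(countries)
    return {
        c.name: share - (cost_per_cutter if c.cuts_emissions else 0.0)
        for c in countries
    }


def example_world():
    """Hypothetical world: one policy country ("A") and nine that do nothing."""
    return [Country("A", True)] + [Country(f"N{i}", False) for i in range(9)]


if __name__ == "__main__":
    gains = net_gains(example_world(), avoided_damage_per_cutter=1.5,
                      cost_per_cutter=1.0)
    for name, gain in gains.items():
        print(f"{name}: {gain:+.2f}")
    print(f"world total: {sum(gains.values()):+.2f}")
    # A policy that fails to cut emissions avoids no damage at all.
    print(f"A, failed policy: {net_gains(example_world(), 0.0, 1.0)['A']:+.2f}")
```

With these made-up numbers the world total is positive but small, the policy country loses, and each non-policy country gains; setting the avoided damage to zero reproduces the case of a costly policy that does not work.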

The mistake made by advocates of climate mitigation is to assume there is a single unitary policy-maker. Global political reality is far different. Extreme examples are when we, in the UK, are exhorted to eat less meat or turn down the thermostat by a couple of degrees to combat climate change.

From the perspective of those committing the resources to risk management, by far the best policy for catastrophic climate change is adaptation. With the rejection of near-universal efforts to cut emissions, the alternative is to reduce the impacts locally, just as the design of buildings is adapted in earthquake areas. That pushes the onus of policy justification onto policy advocates, against the default position that policy will make people worse off. Without the input of the “scientists”, people will adapt anyway.

Geoff,

“Is it or is it not true, as I believe (on the basis of a philosophical hunch) that Lewandowsky, in claiming that a change in the distribution of estimations of climate sensitivity can effect a change in the probability of something happening in the real world, is engaging in magical thinking equivalent to me sticking pins in his effigy?”

Of course Lewandowsky is engaging in magical thinking. Everyone who uses probability theory is.

As for not answering your initial question, it isn’t so much that I didn’t answer it; rather, I challenged the assumption you had been making regarding Lewandowsky’s understanding of the subject. Perhaps I should take more time to explain why I might have done that.

Firstly, you are quite right to suggest that, at the end of the day, this is a philosophical question, and isn’t one that can be answered using ‘simple mathematics’. At the root lies the rather awkward fact that there is no universally agreed conceptual framework for probability. ‘Probability’ is, in fact, a profoundly ambivalent term, and yet we all use it freely without feeling the need to explain ourselves (worse still, we are all quite happy to engage in mathematics based upon quantification and manipulation of ‘probability’). So when you, I or Lewandowsky speak of ‘probability’, in a given context, there is a good chance that we are referring to different things without realising it.

Specifically, we may be referring to something that is an expression of personal ignorance, or something that speaks of the inherent variability of the world. This matters because personal ignorance says nothing about the real world, and differences in probability merely reflect different mental states (within the same individual from time to time, or between different individuals). Conversely, if the term ‘probability’ is being used to speculate upon the state of a well-understood system that exhibits inherent variability, then different probabilities can only appertain to different, potential real-world system states. So what an individual thinks they are doing by changing probabilities very much depends upon the nature of the underlying uncertainty. If we think we are dealing with epistemic uncertainty, then we are using the concept of probability to deal with our gaps in knowledge – filling those gaps will change our probabilities without having any significance regarding the reality. If, however, you think you are dealing with uncertainty born of an inability to predict the future state of a well-defined stochastic system, then a change in probability reflects a changed reference to a real-world state.
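The distinction drawn above can be illustrated with a minimal Bayesian sketch in Python, using a coin rather than climate sensitivity. The coin, its bias of 0.6, the uniform Beta(1, 1) prior and the random seed are hypothetical choices made only for illustration. As flips accumulate, the belief distribution about the coin’s bias narrows (epistemic uncertainty shrinks), yet the coin itself has not changed, so the spread of its individual outcomes (aleatory variability) stays exactly where it was.

```python
"""Epistemic versus aleatory uncertainty with a biased coin.

Hypothetical setup: a coin with a fixed true heads probability. Our belief
about that probability is a Beta distribution updated by observed flips.
"""

import math
import random

TRUE_P = 0.6  # the coin's fixed, real-world bias (hypothetical)


def beta_posterior(heads: int, flips: int, a0: float = 1.0, b0: float = 1.0):
    """Return (mean, sd) of the Beta posterior after observing the flips."""
    a = a0 + heads
    b = b0 + (flips - heads)
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(var)


def aleatory_sd(p: float) -> float:
    """Spread of a single flip's outcome (0 or 1), fixed by the coin itself."""
    return math.sqrt(p * (1.0 - p))


if __name__ == "__main__":
    rng = random.Random(42)
    flips = [rng.random() < TRUE_P for _ in range(1000)]
    for n in (10, 100, 1000):
        mean, sd = beta_posterior(sum(flips[:n]), n)
        print(f"after {n:4d} flips: belief mean {mean:.3f}, belief sd {sd:.4f}")
    # Knowledge has changed; the coin has not, so this number never moves.
    print(f"outcome sd of a single flip: {aleatory_sd(TRUE_P):.4f}")
```

The shrinking belief sd describes the observer, not the coin, which is exactly the ambiguity at issue when a widened or narrowed probability distribution is read as a statement about the world.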

So this is the big question: When Lewandowsky talks of widening a probability distribution, does he mean it to signify a broader range of ignorance within a community, or a higher degree of inherent variability of a system? The former would result from an admission of greater ignorance. The latter can happen in one of two ways:

a) The system has actually changed with respect to its inherent variability, as observed.

b) One has discovered something about the inherent variability of the system that had been previously unknown, in which case there is presumably a narrower range of ignorance within the community.

The question then, isn’t whether Lew thinks that changing a probability density function is actually affecting the real world, but whether he thinks it reflects changes in the system under study or changes in our state of knowledge. Given the way in which these probability density functions are derived, I don’t think anyone can say one way or the other. There seems to be an unholy alliance of epistemic and aleatory uncertainty involved which leaves me (at least) quite unsure whether the probabilities are modelling a system or modelling the expert community that is studying it. I think this is what was meant by the 1990s modeller I quoted above (16 Mar 4:41pm), when he said “who knows what it is”.

I’m guessing this hasn’t helped at all, but you have to at least give me marks for trying. I apologise if it is too long and rambling (it’s a difficult point to express) and I doubly apologise if I am covering stuff you already know.

I tried to post something similar to this a day or two ago, so if it shows up finally, please excuse the duplication.

Set aside for a moment Lew’s essentially circular redefinition of risk into a sciencey Pascal’s Wager.

“Climate” has always impacted us worldwide.

To deal with those impacts we have built canals, ditches, sea walls, levees, barrages, storm drains, etc. worldwide.

As we have seen in Houston and elsewhere, those adaptations wear out for many reasons. They seem to last about 30 years before they need piecemeal upgrades and repairs.

After about 50 years they seem to need large scale renovations.

All of this costs a lot of money.

Far in the future.

So, according to the consensus, we must spend our resources controlling CO2 to mitigate their vision of “climate change”. After all of these years of “communication” and studying the wicked denialist mind certainly the consensus promoters can show us the savings per ppm of CO2.

Where is the benefit?

Which expensive capital infrastructure will we be able to extend the life of?

Which ones will become redundant?

Or conversely, if nothing is done, which projects will cost more to replace as they wear out?

So far all we have seen concretely are failed claims of SLR (sea level rise), since the islands aren’t disappearing due to climate change, along with a long, boring list of failed or falsified claims regarding storms, droughts, floods, etc.

In other words, we are asking Pascal to prove up the risk side of his Wager.

Because from what I can see Lew was no better at defining uncertainty than Pascal, and he is at least as circular and much more pompous and unpleasant.

[Sorry, got stuck in the spam folder. PM]

“…does he mean it to signify a broader range of ignorance within a community, or a higher degree of inherent variability of a system?”

Generically, at least for consensus support if not for any particular individual, I’d suggest that the constant appeal to authority (an inherent cultural feature unrelated to any relevant reality, such as the state of community knowledge or any physical states / observations of the climate system) largely excludes the epistemic angle. This presents as subconscious bias, not a conscious choice, but is more, not less, powerful for that, and will likely lead to contradictions between what is claimed to be considered and the actuality of the argument made. To admit to too much ignorance in the mainstream scientific community would be to invoke cultural policing and the black spot (aka a ‘denialism’ label), even though science must ordinarily consider what it doesn’t know. This is why scientific uncertainties are often presented at a dotting-the-I’s-and-crossing-the-T’s kind of level, rather than as anything more fundamental. In science-orientated forums they cannot literally be presented as non-existent (although that happens big-time in the public domain), because the inconsistency with scientific methods would be too obvious for this kind of engagement.

Willard,

I cite the following:

http://planet3.org/2012/08/24/incredibilism/

There, you state:

‘Once upon a time, Stephan Lewandowsky wrote a series of posts, the last one republished here, arguing for the inescapable implication of uncertainty. This implication, that lies beyond our story, was criticized by Ben Pile in a such a fashion that James Annan, the James Guthrie Award winner for 2010, called “a really strange attempt”.’

You then draw attention to an ‘interesting’ comment exchange, at Ben’s post, between Ben and Tom Fid, an exchange that commences with Tom stating “The premise of this post, that Lewandowsky is implicitly invoking the PP, is wrong.”

No doubt, you did this because it struck you that Tom Fid’s refutation of Ben’s position was a really strange attempt.

John, you say,

“The question then, isn’t whether Lew thinks that changing a probability density function is actually affecting the real world, but whether he thinks it reflects changes in the system under study or changes in our state of knowledge. Given the way in which these probability density functions are derived, I don’t think anyone can say one way or the other.”

As the system under study I presume to be the quasi-chaotic coupled atmosphere/ocean system, I cannot imagine that there would be any fundamental change to that system in any of our lifetimes, let alone Lew’s lunchtime. So must we therefore presume that it is necessarily changes in our state of knowledge of that system which would alter the shape of the probability density function, i.e. changes in our scientific assessment of the coupled ocean-atmosphere’s response principally to increasing GHGs plus the various short-term (water vapour, cloud cover, aerosol/cloud interactions etc.) and long-term (earth system) feedbacks which that increase in atmospheric GHGs induces? Which goes back to what I said before. Our state of knowledge has indeed changed. The expected response of the system has changed. Therefore the shape of the climate sensitivity pdf has changed – it’s got narrower, sharper and less fat-tailed. So if Lew were arguing the complete opposite now, as opposed to in 2012, given our present state of knowledge, that would be somewhat silly, which also renders his argument of 2012, whatever its technical/philosophical merits (or lack of them) back then, somewhat obsolete for the purpose of the real world.
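To make the “less fat-tailed” point concrete, here is a minimal sketch. It assumes, purely for illustration, that climate sensitivity follows a lognormal distribution with a fixed median; the 3.0 K median, the 4.5 K threshold and the two spread values are hypothetical numbers, not estimates taken from the literature. Narrowing the spread shrinks the probability mass above the threshold.

```python
import math


def lognormal_tail(threshold, median, sigma):
    """P(S > threshold) for a lognormal S with the given median and log-space sigma."""
    z = (math.log(threshold) - math.log(median)) / sigma
    # Standard normal survival function via the complementary error function
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical illustration values (assumptions, not published estimates)
median_ecs = 3.0  # assumed median climate sensitivity, K
threshold = 4.5   # assumed "high sensitivity" threshold, K

for sigma in (0.6, 0.3):  # wider pdf, then narrower pdf
    tail = lognormal_tail(threshold, median_ecs, sigma)
    print(f"sigma={sigma}: P(S > {threshold} K) = {tail:.3f}")
```

Holding the median fixed isolates the effect of the spread; in practice a change in our state of knowledge could shift the centre as well as the width.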

“There seems to be an unholy alliance of epistemic and aleatory uncertainty involved which leaves me (at least) quite unsure whether the probabilities are modelling a system or modelling the expert community that is studying it.”

This is a fair statement as knowledge is always a function of those engaged in the ‘knowing’. ‘Facts’ (knowledge, observations) can never be totally dissociated from the observer. Having said that, the world itself provides us with a benchmark against which we can measure our ‘facts’/observations and thus assign to them a degree of autonomy and independence. Thus science progresses, as the world conforms a little more each passing year to our expectations and as we model those expectations a little more accurately to reflect our growing knowledge of how the world is changing and has changed. Well, normal science, that is. Climate science simply adjusts the observations to fit the theory! Hence the attempts to discredit Nic Lewis and Judith Curry’s climate sensitivity estimates by implying that the historical temperature record they worked with was biased or that their estimates of historical forcings were simply wrong.

> I cite the following: […]

I don’t see where you do in the post, John. Thank you nevertheless for the clarification.

Since you appeal to how we get things done in the real world, have you considered using a more practical example, like how a very big company manages the risks of an oil spill?

—Since you appeal to how we get things done in the real world…—

Oh look: a squirrel.

Ben,

Actually, I am not averse to a bit of squirrel hunting. Although I also cannot see how discussing oil company risk assessments can throw any light on this debate, it might still be fun to give it a go.

Having never worked for a big oil company, I am at a bit of a disadvantage. However, I did plan and orchestrate the achievement of ISO 14001 certification for my particular division of a FTSE 500 company. This required me to perform a comprehensive environmental hazard analysis and associated risk assessments, and so I have enough background to hold certain expectations regarding what would be the general approach taken for assessing oil spillage risk (actually, oil spillage did feature in my company’s HAZOP, but not to anywhere near the scale we are talking about here). But why ask me? Why don’t we look at what the International Association of Gas and Oil Producers has to say on the subject:

JIP-6-Oil-spill-risk-assessment.pdf

As I peruse the above, I have to say that I find nothing surprising. The key features of the approach that I would draw to your attention are:

• The establishment of thresholds for risk acceptability (as illustrated in the figure within this article)

• The identification and analysis of specific hazards

• The assessment of likelihoods associated with the hazards

• The application of the ALARP principle (as illustrated in the figure within this article)

• The undertaking of cost-benefit analysis

You will also note the absence of the application of the precautionary principle or any decisions taken upon the basis of uncertainty alone. Risks are quantified and evaluated against thresholds, which implies that uncertainties are within the bounds required for reliable risk calculations and CBA.

So it is just as I expected. The example of big oil company risk assessments for oil spillage has no bearing upon the Lewandowsky argument for decision-making under uncertainty, other than to suggest that my explanation of the role of risk efficiency within the ALARP context has widespread applicability.
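The steps listed above (acceptability thresholds, hazard identification, likelihoods, ALARP, cost-benefit analysis) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method in the JIP-6 report: the two threshold values, the hazard names and figures, and the gross-disproportion factor of 3 are all hypothetical.

```python
from dataclasses import dataclass

# Illustrative annual individual-risk thresholds (assumed values for this sketch)
INTOLERABLE = 1e-3
BROADLY_ACCEPTABLE = 1e-6


@dataclass
class Hazard:
    name: str
    annual_likelihood: float  # estimated event frequency per year
    consequence: float        # assumed conditional probability of fatality given the event

    @property
    def risk(self) -> float:
        # Cardinal risk: likelihood multiplied by consequence
        return self.annual_likelihood * self.consequence


def classify(h: Hazard) -> str:
    """Place a quantified risk into one of the three ALARP regions."""
    if h.risk >= INTOLERABLE:
        return "intolerable"
    if h.risk <= BROADLY_ACCEPTABLE:
        return "broadly acceptable"
    return "ALARP region"


def measure_required(cost: float, risk_reduction_benefit: float,
                     disproportion_factor: float = 3.0) -> bool:
    """In the ALARP region a measure is required unless its cost is grossly
    disproportionate to its (monetised) benefit; the factor is an assumption."""
    return cost <= disproportion_factor * risk_reduction_benefit


hazards = [
    Hazard("hypothetical pipeline rupture", 0.1, 0.05),
    Hazard("hypothetical tank overflow", 0.01, 0.01),
    Hazard("hypothetical valve drip", 1e-3, 1e-4),
]
for h in hazards:
    print(f"{h.name}: risk={h.risk:.1e} -> {classify(h)}")
```

The disproportion test compares cost against a multiple of the benefit rather than the benefit itself, reflecting the usual ALARP expectation that a measure is adopted unless its cost is grossly disproportionate to the risk reduction it buys.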

Willard,

When I said ‘I cite the following…’ I did not mean ‘I cited the following…’ Rather, I meant to say ‘I cite now the following…’ Perhaps I should have made this clearer.

> Perhaps I should have made this clearer.

Citing the post you target would be even clearer, John. It is still time to put a link. Readers who’d click on the link would realize that you were referring to the appendix of a 2012 post about incredibilism, a different topic than yours, featuring not the chap you’re defending right now, but Geoff and a breastly example.

Do you really dispute the point that uncertainty costs money? That’s all one need to accept to concede that uncertainty is no contrarian friend. Even Carrick accepts that. He mentions a paper on the effect of uncertainty shocks on oil prices in the comments of the only post you cite in your parable.

Thank you for the oil spill report.

Present and correct.

JOHN RIDGWAY (18 Mar 19 11.04am)

Many thanks for your excellent explanation for the statistically challenged, and for your patience. In the interest of peace and quiet and of not boring everyone silly, I should leave it there. However, skating over embarrassing differences is what climate scientists do, not what we do.

You say:

Exactly. Lewandowsky is an example of the first case. His graph is not describing anything in the real world. His data are estimates of climate sensitivity, which for our purposes is a constant. Therefore all the values proposed except one are false. It matters not a jot for the purpose of Lewandowsky’s argument whether they are the serious results of peer-reviewed science or wild guesses. Most of them are wrong, by definition.

Lewandowsky then performs a thought experiment, and imagines what would happen if we knew less than we do, and concludes that, in the case of greater uncertainty, the peak of the skewed distribution would erode away mostly to the right, making the world a more dangerous place.

This is silly for a number of reasons, including the ones you give, and mine, which is that Lewandowsky’s assumption of an erosion towards higher values is based on the assumption that climate sensitivity cannot be negative, and that therefore his skewed graph is bounded at zero, which, I suggest, is false. (There may be good scientific reasons for believing it to be positive, but a negative value can’t be ruled out a priori.) I may be wrong here, but so far no-one has said so.
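Whether widening a distribution pushes its mean to the right depends on its shape, which is the substance of the zero-bound objection. In the minimal sketch below, a symmetric normal (which allows negative values) keeps its mean as it widens and simply puts more mass below zero, whereas a lognormal (bounded at zero, right-skewed) with a fixed median has mean median * exp(sigma^2 / 2), which grows with sigma. The choice of these two distributions and all the numbers are assumptions for illustration; the comment does not say which distribution is at issue, and the two spread parameters are in different units (kelvin for the normal, log-space for the lognormal).

```python
import math


def normal_cdf(x, mu, sigma):
    # Normal CDF expressed via the complementary error function
    return 0.5 * math.erfc(-(x - mu) / (sigma * math.sqrt(2)))


def normal_mean(centre, sigma):
    # Symmetric and unbounded: widening leaves the mean unchanged
    return centre


def lognormal_mean(median, sigma):
    # Bounded at zero and right-skewed: mean = median * exp(sigma^2 / 2)
    return median * math.exp(sigma ** 2 / 2)


centre = 3.0  # hypothetical central estimate, K
for s_normal, s_log in ((1.0, 0.3), (2.0, 0.6)):
    print(
        f"normal sd={s_normal}: mean={normal_mean(centre, s_normal):.2f}, "
        f"P(S<0)={normal_cdf(0.0, centre, s_normal):.3f} | "
        f"lognormal sigma={s_log}: mean={lognormal_mean(centre, s_log):.2f}"
    )
```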

But these objections pale into insignificance in comparison with my objection that Lewandowsky is indulging in magical thinking. (And I do not accept your point that all use of probability there is magical thinking.)

There’s a graph around plotting estimates of climate sensitivity against time of publication, showing clearly that recent estimates in the peer reviewed literature are lower, and earlier estimates are higher, and more scattered. Someone even drew a trend line indicating the date at which climate sensitivity would tend to zero. But they did it as a joke. No-one on the sceptical side would be so stupid as to believe that you could derive a mathematical truth from a distribution of human thoughts, be they in the form of a frequency distribution à la Lewandowsky or plotted against time. But that’s what Lewandowsky has done. He has added nothing to our knowledge of climate science or of statistics. His contribution is in the field of pure mathematics. He claims that, for mathematical reasons, the world would get warmer if we didn’t know as much as we do about climate sensitivity. If a tsunami were to strike the next COP meeting, wiping out 97% of climate scientists and increasing our uncertainty about the true value of climate sensitivity, it follows mathematically that the temperature would rise.

Look, I know I have a thing about Lew, but I don’t wish him ill. Security of tenure is a necessary guarantee of academic freedom and all that, and if Bristol University Psychology Department want to continue to employ him to clear out the cages of the lab rats that’s fine by me. He’s not the first scientific genius to have mental issues. Tycho Brahé was a weirdo by all accounts and Gödel wasn’t in peak mental form.

Peter Pan thought that every time a child said they didn’t believe in fairies, a fairy died, so Lew is not alone in believing that the way we think about the world may influence the way the world is. It’s a point of view. I think it needs discussing, that’s all.

Willard,

Your objections to my belated citation of the ‘incredibilism’ article (having not provided an embedded link within the article) are baseless. Irrespective of your article’s primary purpose, it was, nevertheless, a place where you chose to declare your allegiance with regard to the Lewandowsky papers, and that is all that matters. I knew what your position was when I wrote my article. You knew what your position was when you read it. Now everyone knows it. The rest is immaterial.

“Do you really dispute the point that uncertainty costs money?”

If you honestly think that my article is attempting to deny a link between uncertainty and cost then, from my perspective, you are a time waster. That means I’m done with chasing your squirrels.

> I knew what your position was when I wrote my article. You knew what your position was when you read it. Now everyone knows it. The rest is immaterial.

“Immaterial” is a fitting term for an article that does not cite the 2012 post it tries to attack, John. Unless you can contradict James’ claim that Lew’s point follows from the convexity of the damage function, i.e. more uncertainty leads to higher cost, bashing the precautionary principle (PP) does not imply what you make it imply.

Speaking of which, you still haven’t identified which PP you repudiate. Here’s one version:

ns-tast-gd-005.pdf

As you can see, ALARP and PP are far from being incompatible. They don’t even operate at the same level. If ALARP was enough to justify its decisions, a company could use it to bypass its statutory duties. I hope you don’t argue for that kind of libertarian unicorn.
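The convexity point attributed to James (more uncertainty leads to higher expected cost when the damage function is convex, an instance of Jensen’s inequality) can be checked with a few lines of arithmetic. The sketch assumes a quadratic damage function and a two-point spread of warming, both chosen only because the numbers are easy to follow; neither is a claim about the shape of real climate damages. With the mean held at 3.0, widening the spread raises expected damage, because the extra damage at the high end outweighs the saving at the low end.

```python
def quadratic_damage(warming, k=1.0):
    # Assumed convex damage function: D(T) = k * T^2 (k is an arbitrary scale)
    return k * warming ** 2


def expected_damage(mean, spread, damage=quadratic_damage):
    """Expected damage when warming is equally likely to be mean - spread or mean + spread."""
    low, high = mean - spread, mean + spread
    return 0.5 * damage(low) + 0.5 * damage(high)


for spread in (0.0, 0.5, 1.0):
    print(spread, expected_damage(3.0, spread))
# prints 9.0, 9.25 and 10.0 for the three spreads
```

For D(T) = kT² the expected damage is k(μ² + s²), so for a fixed mean it rises with the square of the spread; a linear damage function would show no such effect.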

Geoff,

“However, skating over embarrassing differences is what climate scientists do, not what we do.”

This would appear to be the case, since I have received within this thread far more challenging commentary from yourself, Jaime, Manic and Andy than I have from the supposed opposition, i.e. ATTP and Willard. However, as far as skating over anything is concerned, that is precisely what I would now wish to do. You see, my main concern is that I don’t want to speculate upon anyone’s mental state, or even become too embroiled in a dispute over what Lewandowsky does and does not think he is doing when he pontificates over a particular probability density function. He could be misinterpreting subjective probabilities as objective ones, but I can’t be sure. Besides, who amongst us can, with hand on heart, say they have never made that mistake? It isn’t a cardinal sin.

However, what is a cardinal sin (quite literally) is to proffer an ordinal argument for making decisions under uncertainty, and then act as though one isn’t invoking the precautionary principle (which is precisely what one is doing when cardinal risk assessment is eschewed). Even worse would be to make an argument for taking action based purely upon the effect of uncertainty on expected costs without taking into account the expected cost of risk inefficiency and the effect that uncertainty may have on that.

I’m not saying I have finished with this thread. I still owe Manic and Jaime a response and I’m also tempted to introduce what Nassim Taleb thinks about this sort of thing.
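The contrast between a cardinal risk assessment and an ordinal argument, and the notion of risk efficiency raised earlier in the thread, can be illustrated with a minimal sketch. It assumes one common reading of risk efficiency: an option is risk efficient if no alternative has both lower-or-equal expected cost and lower-or-equal risk, with at least one strictly lower. Using the standard deviation of cost as the risk measure is an assumption, and the option names and figures are hypothetical. The filter only works once both quantities are numbers, which is what a cardinal assessment supplies.

```python
from typing import List, NamedTuple


class Option(NamedTuple):
    name: str
    expected_cost: float  # mean of the option's cost distribution
    risk: float           # assumed cardinal risk measure, e.g. std. dev. of cost


def dominates(a: Option, b: Option) -> bool:
    """a dominates b if it is no worse on both criteria and strictly better on one."""
    no_worse = a.expected_cost <= b.expected_cost and a.risk <= b.risk
    strictly_better = a.expected_cost < b.expected_cost or a.risk < b.risk
    return no_worse and strictly_better


def risk_efficient(options: List[Option]) -> List[Option]:
    """Return the options not dominated by any other (the efficient frontier)."""
    return [o for o in options if not any(dominates(p, o) for p in options)]


options = [
    Option("A", 100.0, 30.0),
    Option("B", 120.0, 10.0),
    Option("C", 130.0, 25.0),  # dominated by B: costs more and is riskier
    Option("D", 90.0, 50.0),
]
print([o.name for o in risk_efficient(options)])  # ['A', 'B', 'D']
```

Choosing among the remaining efficient options still requires a judgement about how much expected cost to trade for reduced risk; the filter only removes options that are worse on both counts.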